I am Dmytro Nakonechnyi, ML Engineer at Uptech. I work with healthcare artificial intelligence (AI) projects at different stages, from early PoCs to production systems used by real patients and medical teams.

What if I told you that the cost of AI in healthcare can drop by up to 30%? What’s more, there’s no need to sacrifice accuracy or safety. Such a cost saving is possible thanks to early planning along with better choices around data, models, infrastructure, and scope.

Many startups and SMBs approach AI with the same question: “How much does AI cost in healthcare, and where does the money actually go?” In healthcare, the answer depends less on the model itself and more on practical constraints, such as data readiness, compliance, and long-term maintenance.

In this article, I break down real AI costs in healthcare, explain what drives them, and show how teams can reduce expenses without risking results.

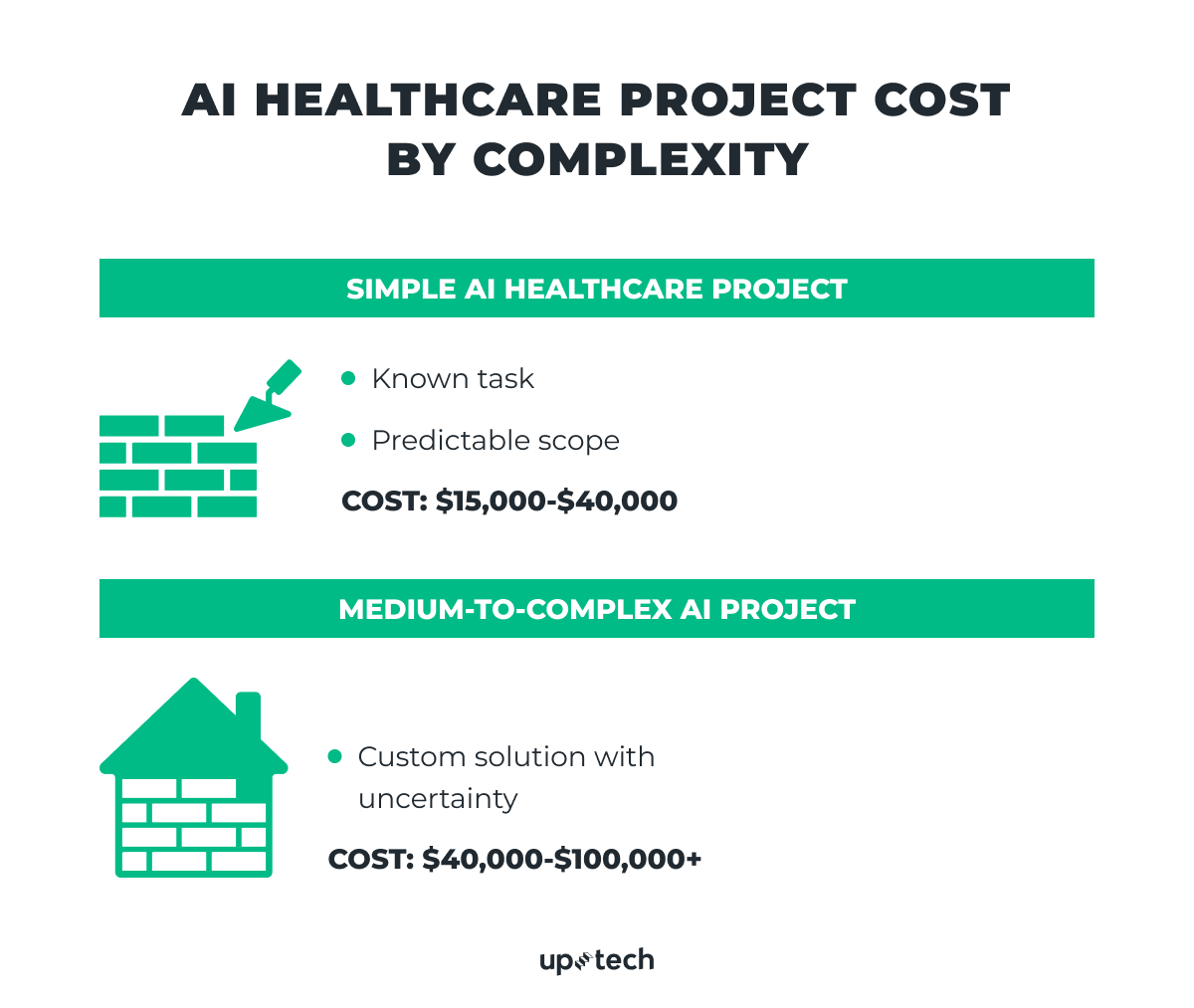

The Cost of Implementing AI in Healthcare Based on Project Complexity

The AI healthcare implementation costs vary widely, just like AI projects themselves. The latter differ in scope, data readiness, and technical uncertainty. A solution that relies on an existing model and clean data will look very different from a custom system that requires research, data preparation, and long-term maintenance.

So, I would like to start with a practical pricing breakdown based on the complexity of real healthcare AI projects. Here, I single out 2 types: simple healthcare AI projects and medium-to-complex ones.

Simple AI healthcare projects (PoC or MVP) on pre-trained models

Typical budget: $15,000-$40,000

Simple AI projects usually target a well-defined and familiar task. They mainly rely on readily available pre-trained models, which helps keep costs under control. When no established go-to solution fits the problem, a custom solution adds extra steps: teams must collect data, train the model, validate results, and set up infrastructure. Each step increases both the timeline and the budget.

Common examples include OCR (Optical Character Recognition) for medical documents or image-based classification. More complex use cases, such as stroke prediction based on remote sensor monitoring, usually require a custom dataset and training a dedicated ML model.

OCR for medical documents

OCR-based systems extract structured data from medical documents such as referrals, prescriptions, lab reports, and insurance forms.

One example is a medical document processing system we built for a private diagnostic clinic in the US. The solution uses OCR combined with self-hosted LLMs to process millions of documents in a HIPAA-compliant environment. It reduced document processing time by 34%, doubled monthly patient capacity, and improved overall patient service satisfaction.

This type of system requires ongoing model monitoring and updates as document formats and input quality change.

Image-based classification

Image classification relies on computer vision models to analyze medical images such as X-rays, CT scans, or MRI results. A common use case is pneumonia detection from chest X-rays. These systems require high-quality labeled datasets, careful model validation, and strict accuracy thresholds.

As an example, let’s take a medical image processing system we built for a private diagnostic clinic in the US. The clinic relied on manual image analysis, which slowed diagnostics and increased the risk of errors. We helped design and implement an AI-powered solution that processes medical images and converts them into structured, actionable data.

The system supports both 2D and 3D medical images, applies image enhancement and segmentation, and classifies conditions such as pneumonia from X-ray scans. To meet healthcare requirements, we added automatic anonymization of personal data embedded in images and ensured full compliance with HIPAA standards. As a result, the clinic reduced image processing time by up to 30% and enabled doctors to make faster, more accurate decisions without changing their existing workflows.

In these cases, the problem space is clear. The expected accuracy can be measured against a custom benchmark from the start.

When a client provides clean and sufficient data, ML and data science teams can often rely on pre-trained open source models and external providers. They can relatively easily train an ML model on the provided data. The work then focuses on data analysis, validation, light adaptation, and deployment. The model usually runs on cloud infrastructure such as AWS or another managed provider.

At this level, the scope includes:

- A clear definition of the AI task, such as text recognition or image classification.

- Creation of a custom evaluation benchmark and defining metrics. (In some cases, this stage may also include training a lightweight custom model without extensive research or data preparation.)

- Deployment of the model to production.

This type of project works well when the solution is known in advance, and risk remains limited.
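To make the benchmark step from the scope list more concrete, here is a minimal sketch of how a small labeled set can be scored. The function name, the data, and the choice of metrics are illustrative assumptions, not a description of any specific project.

```python
from dataclasses import dataclass


@dataclass
class BenchmarkResult:
    accuracy: float
    precision: float
    recall: float


def evaluate(predictions: list[int], labels: list[int]) -> BenchmarkResult:
    """Score binary predictions (1 = condition present) against ground truth."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and equal in length")
    pairs = list(zip(predictions, labels))
    tp = sum(1 for p, y in pairs if p == 1 and y == 1)  # true positives
    fp = sum(1 for p, y in pairs if p == 1 and y == 0)  # false positives
    fn = sum(1 for p, y in pairs if p == 0 and y == 1)  # false negatives
    correct = sum(1 for p, y in pairs if p == y)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return BenchmarkResult(correct / len(labels), precision, recall)


# Hypothetical benchmark: 8 labeled X-rays, model output vs. specialist label.
labels = [1, 1, 1, 0, 0, 0, 0, 1]
predictions = [1, 1, 0, 0, 0, 1, 0, 1]
result = evaluate(predictions, labels)
print(result)
```

In a medical setting, recall (how many true cases the model catches) often matters more than raw accuracy, so it helps to agree on the target metric before comparing models.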

Medium-to-complex AI healthcare projects (more elaborate custom AI solutions)

Typical budget: $40,000-$100,000+

Medium-to-complex projects require custom solutions. In such a project, it can be hard to predict the final cost at the start. The outcome depends on the task type, data availability, data quality, and how well the model can generalize across real-world inputs.

This is where data preparation takes the lead and requires the most effort. Teams may need additional specialists for labeling, normalization, or validation. In some cases, projects require third-party datasets, which can add a high cost. In my experience, there was a project where data acquisition alone cost about $100,000.

These projects also introduce technical risks:

- Input data may change over time, which leads to data drift

- Models must handle a wider range of formats and edge cases

- Accuracy may drop as usage patterns evolve

For example, a system may initially process PDFs in one language, but later receive PDFs in low-resource languages with medical terminology. This change requires model adaptation and retraining.

Custom ML pipelines become necessary. Teams design training workflows, select suitable model architectures, and often train multiple models on the same dataset to compare results. GPU infrastructure adds further cost. Benchmarks guide decisions, but uncertainty remains higher than in simple projects.

Task uniqueness increases complexity even more. Similar solutions may not exist, and there may be no clear reference approach. Teams understand the direction but must validate assumptions through experimentation.

This category defines the upper range of the cost of artificial intelligence in healthcare and requires careful planning before development begins.

Okay, project complexity is only part of the picture. In practice, things are a bit more complicated than this division, and several underlying factors influence AI budgets in healthcare. Let’s look at the key ones in more detail.

3 Key Factors that Impact the Cost of AI in Healthcare

The cost of AI implementation in healthcare depends on several core factors that shape both scope and risk. In real projects, these factors define the cost structure of AI healthcare startups more clearly than just categorizing software into simple/complex or feature count alone. Below are the key elements that most strongly influence the pricing of medical AI.

Data availability and quality

As I mentioned earlier, data plays a central role in AI projects. In most healthcare cases, model architectures already exist and work well in theory. The main limitation is not the model itself, but the data.

There is a reason why data preparation can take up to 80% of the total effort in AI and ML projects. Healthcare data rarely comes in a single, clean format.

ML developers usually work with a mix of clean and noisy data. Clean data follows a consistent format and requires minimal preparation. Noisy data includes missing fields, inconsistent values, scanned or handwritten documents, low-quality audio, and heavily mislabeled records. This type of data requires additional preprocessing, validation, and filtering before it feeds into AI models.

Data volume also affects healthcare AI pricing. Some projects start with very limited datasets, which restricts model training and accuracy. Other projects have enough data in theory, but the quality of this information remains low.

In many cases, additional effort is required before model training can begin. Teams apply several techniques during data preparation. These include data cleansing to remove errors and duplicates, normalization to bring values into a consistent format, labeling to support supervised learning, and validation to confirm data completeness. For healthcare data, anonymization adds another mandatory step to protect patient identity and meet regulatory requirements, such as HIPAA.
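The preparation steps above can be sketched in a few lines. This is a simplified illustration under stated assumptions: the record schema, the unit aliases, and the single SSN-like pattern are all hypothetical, and real de-identification covers far more identifiers than one regular expression.

```python
import re

# Hypothetical schema and unit spellings, chosen only for illustration.
REQUIRED_FIELDS = ("patient_id", "test_name", "value", "unit")
UNIT_ALIASES = {"mg/dl": "mg/dL", "mgdl": "mg/dL", "mmol/l": "mmol/L"}
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def normalize(record: dict) -> dict:
    """Trim strings and map unit spellings to one canonical form."""
    cleaned = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
    unit = cleaned.get("unit")
    if isinstance(unit, str):
        cleaned["unit"] = UNIT_ALIASES.get(unit.lower(), unit)
    return cleaned


def is_complete(record: dict) -> bool:
    """Validation: every required field must be present and non-empty."""
    return all(record.get(field) not in (None, "") for field in REQUIRED_FIELDS)


def redact(record: dict) -> dict:
    """Mask SSN-like strings in free text. Not a full de-identification."""
    out = dict(record)
    if isinstance(out.get("notes"), str):
        out["notes"] = SSN_PATTERN.sub("[REDACTED]", out["notes"])
    return out


def prepare(records: list[dict]) -> list[dict]:
    """Normalize, drop incomplete rows, remove duplicates, then redact."""
    seen = set()
    prepared = []
    for raw in records:
        rec = normalize(raw)
        if not is_complete(rec):
            continue
        key = (rec["patient_id"], rec["test_name"], rec["value"], rec["unit"])
        if key in seen:
            continue
        seen.add(key)
        prepared.append(redact(rec))
    return prepared


raw_records = [
    {"patient_id": "p1", "test_name": "glucose", "value": 95, "unit": " mg/dl ",
     "notes": "SSN 123-45-6789 on file"},
    {"patient_id": "p1", "test_name": "glucose", "value": 95, "unit": "mg/dL"},
    {"patient_id": "p2", "test_name": "glucose", "value": None, "unit": "mg/dL"},
]
clean_records = prepare(raw_records)
print(clean_records)
```

Even in this toy case, three raw rows shrink to one usable row, which is a small-scale picture of why preparation effort grows with noisy data.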

By the way, if you want to learn how to build GDPR- and HIPAA-compliant products from day one, I suggest you read our technical guide on this topic.

Public datasets exist, but they rarely solve the problem fully. Many lack diversity, fail to reflect real clinical workflows, or come with non-commercial or otherwise restrictive licenses that prevent their use in production healthcare products.

In such cases, teams must either acquire licensed datasets or invest in custom data collection. Both options add cost and complexity. Licensing terms also affect long-term usage and scaling plans, which directly shapes the cost structure of AI healthcare startups.

All of these factors explain why data preparation often becomes the most expensive and time-consuming phase. They also explain the real impact of AI on healthcare costs: AI reduces operational costs only after teams invest enough effort to make data usable and reliable.

Task type and problem definition

Task type directly affects how much AI costs in healthcare and how predictable that cost remains over time. Some AI tasks follow well-established patterns. Others introduce uncertainty long before development reaches production.

Common AI tasks in healthcare

Well-known tasks such as text extraction from medical documents, image classification, or basic prediction rely on proven technical approaches. These tasks usually allow clearer estimates because teams understand the expected inputs, outputs, and evaluation methods. In such cases, healthcare AI implementation costs stay more stable and are easier to control.

Uncommon AI tasks in healthcare

Unique or less common tasks change the picture. When no clear reference solution exists, teams must test multiple approaches to identify what works. This phase often includes experiments with different models, data representations, and evaluation strategies. Exploration adds time and cost before any production-ready solution appears.

In healthcare, task definition also affects compliance and safety requirements. A system that supports internal analytics differs greatly from one that influences patient-facing decisions. These differences shape the impact of AI on healthcare costs, since higher-risk tasks require stricter validation and monitoring.

Model choice and customization level

Model choice has a direct effect on scope, timeline, and the cost of AI implementation in healthcare. Most AI projects start with a decision between pre-trained models and custom-built ones.

Using pre-trained models

Pre-trained models are models that others have already trained on large datasets. These include open-source models and commercial APIs. They work well when the task is common, and the input data matches what the model expects. Examples include text extraction, basic document classification, or standard image analysis. These models reduce early effort because teams skip model training and focus on integration, validation, and deployment. This approach usually supports faster delivery and lower initial cost.

This is possible only when the capabilities of a pre-trained model are sufficient, which teams confirm through benchmark testing against defined ML and business metrics.
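One way to picture this check is a simple gate that compares measured results with agreed thresholds. The sketch below is an assumption-laden example: the metric names, thresholds, and measured values are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    min_recall: float        # ML metric
    max_cost_per_doc: float  # business metric, USD per processed document
    max_latency_s: float     # business metric, seconds per document


@dataclass
class Measured:
    recall: float
    cost_per_doc: float
    latency_s: float


def failed_checks(measured: Measured, required: Requirements) -> list[str]:
    """Return the names of all requirements the candidate model misses."""
    failures = []
    if measured.recall < required.min_recall:
        failures.append("recall")
    if measured.cost_per_doc > required.max_cost_per_doc:
        failures.append("cost_per_doc")
    if measured.latency_s > required.max_latency_s:
        failures.append("latency_s")
    return failures


# Hypothetical numbers from a benchmark run of a pre-trained model.
required = Requirements(min_recall=0.95, max_cost_per_doc=0.02, max_latency_s=3.0)
pretrained = Measured(recall=0.91, cost_per_doc=0.01, latency_s=1.2)

failures = failed_checks(pretrained, required)
decision = "use pre-trained model" if not failures else "consider fine-tuning or a custom model"
print(failures, decision)
```

Here the model is cheap and fast enough but misses the recall target, which is the kind of result that pushes a project toward fine-tuning or custom development.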

Building and training custom ML models

Custom models follow a different path. Teams choose this option when pre-trained solutions fail to meet accuracy or performance requirements. This situation often appears in healthcare, where data differs from public benchmarks or where outputs must meet strict domain-specific rules.

Custom development requires additional work across several areas. Teams choose the model type for the task, build training and evaluation pipelines, define custom benchmarks, and provision infrastructure for training and inference. Each step adds time and increases complexity. This shift changes both budget structure and risk profile.

At Uptech, we have worked on projects where custom AI solutions were the only viable option. In such cases, off-the-shelf models could not handle proprietary data formats, domain-specific logic, or regulatory constraints. Custom development allowed us to reach required accuracy levels and support real production use, but it also required deeper research and longer timelines.

Model choice should follow task and data analysis, not the other way around. A pre-trained model works best when constraints stay low. A custom model makes sense when quality, safety, or uniqueness matter more than speed of development.

Hidden and Additional AI Costs in Healthcare

AI budgets in healthcare often extend beyond initial development. When you estimate digital health solution pricing for AI, it’s important to take into account recurring and indirect costs that can affect the total investment after launch.

Ongoing infrastructure and model hosting

Every AI model requires a production environment. The model often must run on servers, handle requests, and scale with usage. Depending on traffic and compute needs, monthly infrastructure costs often range from $100 to $10,000+. This becomes especially relevant for AI features with frequent user interaction.

Let’s say there’s an AI chatbot that analyzes medical conditions. It may handle long conversations and repeated requests. You never know how many requests each individual user will make. And each interaction consumes valuable resources. These usage-based costs grow with adoption and can significantly affect AI digital health solution pricing if not planned upfront.

In contrast, some solutions require minimal or even no cloud infrastructure. For example, a fitness bracelet with an AI analyzer can generate personalized workout plans based on sensor data and run entirely on the user’s device. The Uptech team can assess early whether your problem type will require significant infrastructure investment.

Token usage and variable AI workloads

AI workloads rarely stay static. As more users rely on AI features, token consumption increases.

Tokens are small units of text that AI models process when they read input and generate responses. This includes user messages, documents sent to the model, and the model’s own replies. The more text the AI processes and produces, the more tokens it uses, which directly affects usage-based AI costs.

This applies to chat-based assistants, document analysis, clinical summaries, and automation tools. Variable usage makes cost prediction harder and introduces ongoing expenses that scale with real-world use.
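A rough back-of-the-envelope estimate shows how usage drives this cost. All numbers below are hypothetical placeholders, including the per-token prices, which do not correspond to any specific provider.

```python
def monthly_token_cost(
    users: int,
    conversations_per_user: int,
    input_tokens_per_conversation: int,
    output_tokens_per_conversation: int,
    usd_per_million_input: float,
    usd_per_million_output: float,
) -> float:
    """Estimate monthly spend for a usage-billed model from average usage."""
    conversations = users * conversations_per_user
    input_millions = conversations * input_tokens_per_conversation / 1_000_000
    output_millions = conversations * output_tokens_per_conversation / 1_000_000
    return input_millions * usd_per_million_input + output_millions * usd_per_million_output


# Assumed scenario: 2,000 users, 10 conversations each per month.
baseline_cost = monthly_token_cost(2_000, 10, 3_000, 800, 3.0, 15.0)
# Same product after adoption doubles.
doubled_cost = monthly_token_cost(4_000, 10, 3_000, 800, 3.0, 15.0)

print(f"baseline: ${baseline_cost:,.2f}, after growth: ${doubled_cost:,.2f}")
```

The estimate scales linearly with users and with conversation length, so both adoption and prompt size deserve a line in the budget.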

This pattern also appears in operational use cases, such as AI-driven hospital procurement pricing, where AI systems analyze supply data, vendor inputs, and demand forecasts on a regular basis. Frequent model execution adds recurring compute costs that teams must account for.

Data drift and retraining

Another hidden cost comes from data drift. Over time, input data changes. New document formats, new devices, or new user behavior patterns appear. When the model encounters data it has not seen before, metrics drop.

To address this, teams need to fine-tune or retrain the model on updated data. In some cases, parts of the pipeline require redesign to support new inputs. This work often brings the ML team back into the project multiple times after launch and adds unplanned cost.
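Drift can be caught before metrics visibly drop by comparing the distribution of an input feature in production against the training baseline. The sketch below uses the Population Stability Index (PSI) as one possible measure. The feature, the bin count, and the 0.2 alert threshold (a common rule of thumb, not a standard) are assumptions.

```python
import math


def psi(baseline: list[float], current: list[float], bins: int = 5) -> float:
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all baseline values are equal

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    expected, actual = shares(baseline), shares(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Hypothetical feature: pages per uploaded document.
training_pages = [10, 20, 30, 40, 50] * 20
production_pages = [50, 60, 70] * 20  # users started sending much longer files

drift_score = psi(training_pages, production_pages)
needs_review = drift_score > 0.2  # assumed alert threshold
print(round(drift_score, 2), needs_review)
```

A check like this is cheap to run on a schedule, and it turns retraining from a surprise into a planned task.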

Ongoing ML support

AI products do not reach a stable state after launch. Once a model goes into production, it requires regular monitoring to track metrics under real workloads. Without this, teams may miss gradual performance degradation or failures in edge cases.

Infrastructure also needs ongoing attention. Teams must monitor compute usage, optimize resource allocation, and adjust scaling rules as demand grows. Changes in model APIs or security requirements may force technical updates even when product features remain the same.

In practice, this means AI initiatives require continued involvement from ML engineers after launch.

When you plan for these costs early, you can avoid budget gaps and support more sustainable AI adoption in healthcare.

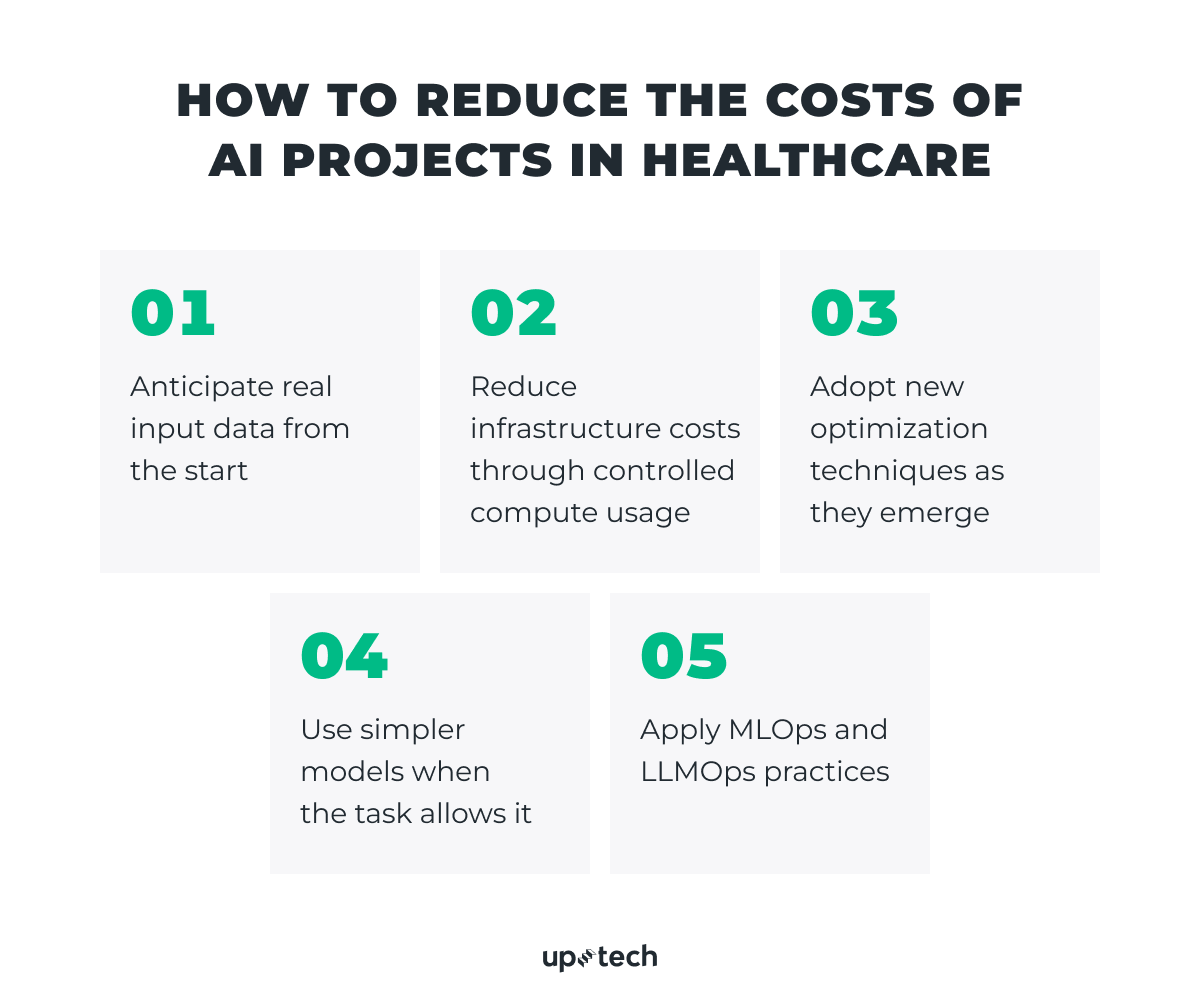

Tips on How to Reduce the Costs of AI Projects in Healthcare

Okay, as I said at the very beginning, it is possible to reduce the cost of implementing AI in healthcare. Doing so takes concrete technical decisions. Based on real project experience, cost savings come from how teams handle data, infrastructure, model choice, and AI operations, not from cutting required steps.

Below, I have rounded up several useful recommendations that can help reduce the cost of AI in healthcare projects big time.

Anticipate real input data from the start

Many AI projects become expensive because input data behaves differently in production than expected. Users upload files in new formats, submit incomplete data, or use the system in ways not covered during initial testing.

When teams define what users will actually submit to the model and adapt the solution to these scenarios early, they reduce the need for repeated retraining and pipeline changes later. This approach doesn’t affect output quality and helps keep long-term AI costs in healthcare under control.
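In practice, this can start as an explicit input contract that routes unexpected submissions instead of passing them to the model. The sketch below is hypothetical: the supported formats, the size limit, the language set, and the routing labels are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from pathlib import PurePath

# Assumed contract for a document-processing feature.
SUPPORTED_EXTENSIONS = {".pdf", ".png", ".jpg", ".jpeg"}
SUPPORTED_LANGUAGES = {"en"}
MAX_SIZE_MB = 25


@dataclass
class Upload:
    filename: str
    size_mb: float
    language: str  # e.g. detected upstream by a language-identification step


def triage(upload: Upload) -> str:
    """Decide whether an upload goes to the model, to a human, or is rejected."""
    extension = PurePath(upload.filename).suffix.lower()
    if extension not in SUPPORTED_EXTENSIONS:
        return "reject:unsupported_format"
    if upload.size_mb > MAX_SIZE_MB:
        return "reject:too_large"
    if upload.language not in SUPPORTED_LANGUAGES:
        return "manual_review:unsupported_language"
    return "accept"


print(triage(Upload("referral.PDF", 2.0, "en")))
print(triage(Upload("scan.tiff", 4.0, "en")))
print(triage(Upload("lab_report.pdf", 1.0, "uk")))
```

Logging the rejected and manually reviewed cases also shows which new input types are worth supporting next.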

Reduce infrastructure costs through controlled compute usage

Healthcare AI infrastructure costs often grow due to continuous resource usage. Training jobs, real-time processing, or heavy inference do not always need to run all the time.

At Uptech, we reduce infrastructure expenses by scheduling GPU workloads to run only during specific periods when the task allows it. This limits idle compute usage and lowers monthly costs while keeping model performance unchanged.
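A minimal sketch of the idea, assuming a nightly processing window and a made-up hourly GPU rate (neither reflects a specific cloud provider or project):

```python
from datetime import datetime, time

GPU_HOURLY_RATE_USD = 3.0  # hypothetical price for one GPU instance


def in_window(now: datetime, start: time, end: time) -> bool:
    """True if `now` falls inside the allowed window, including overnight windows."""
    current = now.time()
    if start <= end:
        return start <= current < end
    return current >= start or current < end  # window crosses midnight


def monthly_gpu_cost(hours_per_day: float, days: int = 30,
                     rate: float = GPU_HOURLY_RATE_USD) -> float:
    return hours_per_day * days * rate


# Assumed window: batch jobs may run between 22:00 and 04:00.
window_start, window_end = time(22, 0), time(4, 0)
print(in_window(datetime(2025, 1, 1, 23, 30), window_start, window_end))
print(in_window(datetime(2025, 1, 1, 12, 0), window_start, window_end))

always_on = monthly_gpu_cost(24)
scheduled = monthly_gpu_cost(6)
print(always_on, scheduled)
```

With these assumed numbers, a six-hour window costs a quarter of an always-on instance. Real savings depend on whether the workload tolerates delayed processing.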

What we do to reduce cloud infrastructure costs

- Use serverless approaches, such as AWS Lambda, to avoid constant server billing

- Apply batching during inference to process multiple requests in a single run

- Optimize application and model code to remove performance bottlenecks

- Use model quantization and pruning to make inference faster and less expensive

- Cache repeated results with tools like Redis when responses recur frequently

- Deploy models on user devices when possible to enable fast inference and reduce infrastructure costs
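Two items from this list, batching and caching, can be illustrated together. The sketch below is a simplified stand-in: `run_model` is a hypothetical placeholder for a real inference call, and a plain dictionary plays the role that a shared cache such as Redis would play in production.

```python
from typing import Iterator

MODEL_CALLS = 0  # counts inference runs so the saving is visible


def run_model(batch: list[str]) -> list[str]:
    """Hypothetical stand-in for one inference run over a batch of inputs."""
    global MODEL_CALLS
    MODEL_CALLS += 1
    return [text.upper() for text in batch]


def batched(items: list[str], size: int) -> Iterator[list[str]]:
    for start in range(0, len(items), size):
        yield items[start:start + size]


def infer_all(texts: list[str], cache: dict[str, str], batch_size: int = 4) -> list[str]:
    """Run the model only for inputs not seen before, several per call."""
    missing = [t for t in dict.fromkeys(texts) if t not in cache]  # unique, in order
    for chunk in batched(missing, batch_size):
        for text, output in zip(chunk, run_model(chunk)):
            cache[text] = output
    return [cache[t] for t in texts]


cache: dict[str, str] = {}
requests = ["a", "b", "a", "c", "b", "d", "e"]

first = infer_all(requests, cache)   # 5 unique inputs -> 2 batched model calls
second = infer_all(requests, cache)  # everything is cached -> no new calls
print(first, MODEL_CALLS)
```

Fourteen requests are served with two model runs here. Note that caching only fits cases where identical input should produce identical output, and cached medical data must be protected like any other patient data.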

Adopt new optimization techniques as they emerge

AI systems evolve fast, and cost-efficient solutions depend on keeping up with current best practices. We actively track new approaches to token and infrastructure optimization and apply them when they make sense for a specific problem.

One example is the TOON format, a structured approach to token optimization for agent-based systems. It helps reduce unnecessary token usage. I covered this topic in a dedicated keynote, where I discussed how such formats improve efficiency without degrading output quality.
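To give a feel for where the savings come from, here is a simplified encoder loosely modelled on the tabular layout that TOON uses for uniform arrays. It is an illustration, not an implementation of the TOON specification: quoting, nesting, and escaping rules are left out, the data is made up, and character count is used only as a rough proxy for token count.

```python
import json


def to_tabular(name: str, rows: list[dict]) -> str:
    """Encode a uniform list of flat records as one header line plus one line per row."""
    fields = list(rows[0])
    for row in rows:
        if list(row) != fields:
            raise ValueError("all rows must share the same fields in the same order")
        if any("," in str(v) or "\n" in str(v) for v in row.values()):
            raise ValueError("values with commas or newlines need quoting (not covered here)")
    header = f"{name}[{len(rows)}]{{{','.join(fields)}}}:"
    lines = ["  " + ",".join(str(row[f]) for f in fields) for row in rows]
    return "\n".join([header, *lines])


# Hypothetical payload an agent might pass to a model.
patients = [
    {"id": 1, "name": "Ana", "dose_mg": 50},
    {"id": 2, "name": "Bo", "dose_mg": 75},
]

as_json = json.dumps({"patients": patients})
as_tabular = to_tabular("patients", patients)

print(as_tabular)
print(len(as_json), len(as_tabular))
```

The field names appear once instead of once per record, so the saving grows with the number of rows. For deeply nested or irregular data the benefit is smaller, which is why such formats need to be tested per use case.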

In practice, this means we continuously evaluate new frameworks, formats, and optimization techniques. When a proven approach appears, we test it and adopt it if it helps solve the problem faster, cheaper, or more reliably. The goal stays the same: deliver the required result with the least possible overhead, without sacrificing correctness or compliance.

Use simpler models when the task allows it

Not every healthcare task requires large language model (LLM) development. Some problems work better with smaller or task-specific models.

If simpler models meet your accuracy and compliance requirements, choosing them reduces training time, inference cost, and infrastructure load. This choice contributes directly to AI-driven healthcare cost savings.

Apply MLOps and LLMOps practices

AI systems require ongoing support after launch. MLOps and LLMOps practices help teams monitor performance, manage retraining, and control infrastructure usage.

In simple terms, what do these terms mean?

- MLOps covers how machine learning models get deployed, monitored, and updated in production.

- LLMOps focuses on managing large language models, including prompt updates, token usage, and model versions.

With these processes in place, teams avoid unnecessary retraining, detect issues earlier, and reduce unplanned work. This leads to more predictable costs over time.

If you want to get a clearer picture of these and other aspects of the cost-effectiveness of AI in healthcare, reach out to us and get quality AI consulting services.

Where teams should not try to cut costs

You should not reduce effort in data quality, evaluation, or compliance. Poor data or weak validation leads to inaccurate output and repeated fixes, which increases costs later.

When it comes to AI in healthcare, cost reduction works best when you make informed technical choices early and revisit them as the product evolves.

How to Start AI Development in Healthcare with a Limited Budget?

If your budget is limited, the safest way to start AI development in healthcare is to narrow the scope as much as possible. Trying to build a full product with multiple AI features is tempting, it really is. But it’s better to start with a PoC or MVP that focuses on one concrete ML task.

Read our guide on how to build an MVP that will work.

At this stage, the goal is simple: check whether the AI feature you have in mind actually works in real conditions and solves a real problem.

A practical starting point usually looks like this:

- One AI use case tied to a specific business need

- Clear criteria for success

- Pre-trained or ready-made models, if they meet your requirements

Custom models, complex pipelines, and heavy infrastructure make sense later, when you already know what works and why.

Take Clearly, a mental health marketplace built by Uptech’s internal R&D team, as an example. Our team followed this approach. The first version of the product launched as a small MVP with a landing page, therapist profiles, and basic messaging. At that point, the focus stayed on validating demand and core user flows.

AI was not part of the initial release. The team added AI-powered features later, after real usage data became available and product-market fit was clear. This made it easier to introduce AI where it added value, without increasing early risk or cost.

The takeaway is straightforward: start small and stay focused. Validate the core functionality first. If AI is your main goal, make that single AI feature the core of your MVP. Everything else can wait until you have proof that the idea works.

Do you want to validate an AI idea or estimate the cost of an MVP before committing to full development?

Contact us for a practical, experience-based assessment.