You’ve decided your product needs generative AI. Now comes the harder part: figuring out how to make it happen.

The urgency is real. Since ChatGPT made generative AI mainstream, companies across industries have rushed to add similar AI to their products.

As the co-founder and tech lead, I’ve seen this space grow from a niche field into a mainstream force. RSM reports that 91% of middle-market companies now use generative AI, up from 77% last year.

At Uptech, we've helped build quite a few AI-powered tools, including:

- Dyvo.ai — AI-driven image generation for product visuals and avatars.

- Plai — an HR platform with an AI assistant for smarter people management.

- Hamlet — an AI-powered text summarization tool.

- Presidio Investors — automated data pipelines with AI for investment insights.

Based on our experience, I’d like to share some insights that can help you approach generative AI development with confidence.

This guide is primarily aimed at startups, product managers, entrepreneurs, and teams who want to integrate AI into their digital products, and it includes technical detail wherever it helps. You can skip the basics and jump straight to the actual steps.

Let’s dive in.

What is Generative AI?

Generative AI is a set of deep learning architectures that create new content based on patterns learned from their training data.

During training, the model learns specific patterns from the provided samples, encodes them in its parameters, and later uses them to create new outputs: text, images, videos, audio, or even code.

Modern generative AI took off in 2014 with the introduction of Generative Adversarial Networks (GANs), and gained momentum in 2017 with the arrival of the transformer architecture.

Today, generative AI tools like ChatGPT, Midjourney, DALL-E, and Gemini rely on various neural network architectures, including diffusion models, GANs, transformers, and variational autoencoders, to produce new, human-like content.

It will be helpful to examine each of these in more detail.

Main generative AI models

Diffusion networks

Diffusion models are a type of generative AI that learn to reverse a gradual noising process. During training, noise (random disturbances) is added to data step by step, and the model learns how to remove it. At generation time, it runs this process in reverse to create new content such as images or sounds.

You can imagine it like restoring an old painting: over time, dust and cracks cover the image until it's almost unrecognizable. During training, the model sees images at every stage of this corruption and learns to undo it one step at a time. Later, it can start with pure random noise and remove it step by step, eventually revealing a new, realistic image that wasn't in the training set.
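To make the corruption process concrete, here is a minimal sketch of the forward (noising) half of a DDPM-style diffusion model, written in plain Python so it runs without extra libraries. The linear schedule (`beta_start=1e-4`, `beta_end=0.02`, 1,000 steps) is a common textbook choice assumed for illustration, not taken from any particular product, and the neural network that learns to undo each step is not shown.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Variance of the noise added at each step, rising linearly (a common DDPM-style choice)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar[t] = product of (1 - beta) up to step t: how much original signal survives."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    a_bar = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * n for x, n in zip(x0, noise)]
    # During training, a network sees (xt, t) and learns to predict `noise`;
    # at generation time it uses those predictions to walk back from pure noise.
    return xt, noise

betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
x0 = [0.5, -0.2, 0.8, 0.1]  # a tiny stand-in for pixel values
slightly_noisy, _ = add_noise(x0, 10, alpha_bars, rng)
almost_pure_noise, _ = add_noise(x0, 999, alpha_bars, rng)
print(f"signal kept at step 10: {alpha_bars[10]:.4f}, at step 999: {alpha_bars[999]:.6f}")
```

By the last step almost none of the original signal remains, which is why generation can start from random noise alone.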

This approach powers some of today’s most popular AI tools, including Stable Diffusion, DALL-E, and Midjourney, which can generate highly detailed visuals from simple text prompts.

Diffusion models are best suited for creative industries, marketing, design, and any domain where high-quality image or video generation is key. Their advantage is photorealism and detail, but they require significant computing power and can be costly to train at scale.

Generative adversarial networks (GANs)

Generative Adversarial Networks (GANs) use two competing neural networks — the generator and the discriminator — trained together in an adversarial process.

The generator produces synthetic data (such as images) from random noise. The discriminator evaluates these samples and estimates the probability that each one is real.

When dealing with images, both networks are often built using convolutional neural networks (CNNs). Training works like a contest: if the discriminator identifies a sample as fake, the generator adjusts its parameters. If the generator successfully produces an image that fools the discriminator, the discriminator also learns from the mistake.
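The contest can be written as two loss functions. This sketch assumes the discriminator already outputs a probability for each sample (`d_real` for real data, `d_fake` for generated data) and uses the standard binary cross-entropy formulation with the common "non-saturating" generator loss. Real training computes these on framework tensors and backpropagates through both networks, which is omitted here.

```python
import math

EPS = 1e-12  # guards against log(0)

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: low when real samples score near 1 and fakes near 0."""
    real_term = sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator scores fakes as real."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)

# A sharp discriminator catches the fakes: its loss is low, the generator's is high.
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]), generator_loss([0.1, 0.05]))
# A fooled discriminator: the generator's loss drops and the discriminator's rises.
print(discriminator_loss([0.9, 0.95], [0.9, 0.8]), generator_loss([0.9, 0.8]))
```

When the discriminator can do no better than guessing 0.5 for every sample, its loss settles at 2·ln 2 ≈ 1.386, the balance point the adversarial game aims for.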

Through many iterations, both networks improve. A GAN is considered successful when the generator produces outputs that are indistinguishable from real data, to both the discriminator and human observers.

GANs are highly effective for generating realistic images, videos, and even synthetic data (for training other AI models). They’re powerful for data augmentation, style transfer, and design, but are known to be difficult to train and can sometimes produce unstable results.

Transformer-based models

Transformer models, introduced by Google in 2017, have become the go-to architecture for natural language processing (NLP). They are the best choice if you need software for translation or text generation.

Transformers work with sequences, such as text or speech, and predict what comes next based on the context. Popular examples include GPT-4 by OpenAI and Claude by Anthropic.

Here’s how they work in a few steps.

First, the input text is broken into tokens, which are words or subwords. Each token is then turned into a numerical embedding: a vector that captures its meaning, so tokens with similar meanings end up with similar vectors. The same property lets embeddings power search by meaning rather than exact keywords. For example, a user searching for "running shoes" could also see results for "athletic footwear" if embeddings are used. This makes embeddings valuable for search, personalization, and retrieval across AI applications.

Because self-attention on its own does not know the order of tokens, positional encoding is added to each embedding so the model can tell where every word sits in the sentence.
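The "running shoes" example comes down to comparing vectors. The sketch below ranks catalog items by cosine similarity, a common way to compare embeddings. The three-dimensional vectors are made up for illustration; a real embedding model would produce hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """1.0 means the vectors point the same way (similar meaning); values near 0 suggest unrelated items."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_by_meaning(query_vec, catalog):
    """Return catalog item names sorted from most to least similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), name) for name, vec in catalog.items()]
    return [name for _, name in sorted(scored, reverse=True)]

# Hypothetical 3-dimensional embeddings.
catalog = {
    "athletic footwear": [0.9, 0.8, 0.1],
    "trail sneakers": [0.8, 0.9, 0.2],
    "leather office chair": [0.1, 0.0, 0.9],
}
query = [0.85, 0.85, 0.15]  # pretend embedding of "running shoes"
ranking = rank_by_meaning(query, catalog)
print(ranking)  # the office chair ranks last even though no keywords overlap
```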

The model then runs the data through a neural network made of two key parts. The self-attention mechanism looks at how each token relates to others in the sequence, even if they’re far apart. The feedforward network refines that information using patterns learned during training.
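Self-attention itself is a short computation. This plain-Python sketch shows single-head scaled dot-product attention: each token's query is compared with every token's key, the scores become weights through softmax, and the output is a weighted mix of value vectors. In a real transformer, queries, keys, and values come from learned projections of the embeddings and there are many heads; here they are passed in directly to keep the example small.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    top = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention without masking; one vector per token."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # How strongly this token should attend to every token in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is a weighted mix of all value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return outputs

out = self_attention(queries=[[1.0, 1.0]], keys=[[1.0, 0.0], [1.0, 0.0]], values=[[2.0, 0.0], [0.0, 4.0]])
print(out)  # [[1.0, 2.0]]: equal keys get equal weights, so the output averages the values
```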

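This process repeats through multiple layers, helping the model build a deep understanding of the input. At the end, a softmax function turns the model's scores into a probability for every token in the vocabulary, and a decoding strategy picks the next token, either the most likely one or a sample from that distribution. That token is added to the sequence, and the cycle continues.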
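The generation loop can be sketched with a toy stand-in for the model. `TOY_LOGITS` is a hypothetical lookup table playing the role of the network's output scores; unlike a real transformer, which attends to the whole sequence, it only looks at the last token. Softmax turns the scores into probabilities, and the decoder then picks greedily or samples, with `temperature` controlling how adventurous sampling is.

```python
import math
import random

# Hypothetical toy "model": next-token scores given only the previous token.
TOY_LOGITS = {
    "<start>": {"the": 2.0, "a": 1.0},
    "the": {"cat": 2.5, "dog": 1.5},
    "a": {"cat": 1.0, "dog": 2.0},
    "cat": {"sat": 3.0, "<end>": 0.5},
    "dog": {"ran": 3.0, "<end>": 0.5},
    "sat": {"<end>": 3.0},
    "ran": {"<end>": 3.0},
}

def softmax(logits, temperature=1.0):
    """Convert scores to probabilities; higher temperature flattens the distribution."""
    scaled = {tok: v / temperature for tok, v in logits.items()}
    top = max(scaled.values())
    exps = {tok: math.exp(v - top) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def generate(max_tokens=10, greedy=True, temperature=1.0, seed=0):
    rng = random.Random(seed)
    sequence = ["<start>"]
    for _ in range(max_tokens):
        probs = softmax(TOY_LOGITS[sequence[-1]], temperature)
        if greedy:
            token = max(probs, key=probs.get)  # always take the most likely token
        else:
            tokens, weights = zip(*probs.items())
            token = rng.choices(tokens, weights=weights, k=1)[0]
        if token == "<end>":
            break
        sequence.append(token)  # the output is fed back in and the cycle repeats
    return sequence[1:]

print(generate())  # ['the', 'cat', 'sat']
print(generate(greedy=False, temperature=1.2, seed=7))
```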

Transformers are the most versatile of all generative models. They power chatbots, copilots, recommendation systems, and knowledge search. Their strengths are scalability, adaptability, and accuracy in language tasks, but they are expensive to train and can sometimes generate hallucinations (plausible but false outputs).

Variational Autoencoders

Variational Autoencoders (VAEs) are a type of generative model made up of two main parts: an encoder and a decoder.

- The encoder compresses input data into a latent space — a simplified representation of the essential features. Unlike fixed values, this latent space is modeled as a probability distribution, which introduces controlled randomness and flexibility.

- The decoder then reconstructs data from the latent space, generating new outputs that resemble the training data without reproducing it exactly.

You can think of latent space as the data's DNA. It holds the core information needed to recreate something similar to the original, but not identical. Because training keeps the latent space smooth, small tweaks to a point in it produce small, meaningful variations in the output.

The decoder takes that compressed "DNA" and turns it into new content that resembles what the model has seen during training, without copying anything exactly.
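Two details make a VAE trainable, and both fit in a few lines. The encoder outputs a mean and a log-variance for each latent dimension; a latent code is sampled with the "reparameterization trick" (mean plus scaled noise), and the loss adds a KL-divergence term that keeps the latent distribution close to a standard normal. This sketch assumes the usual diagonal Gaussian latent space; the encoder and decoder networks are not shown, and the example encoder output is hypothetical.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, 1), so gradients can flow through mu and sigma."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))

def vae_loss(reconstruction_error, mu, log_var):
    """How badly the decoder rebuilt the input plus how far the latents drift from N(0, 1)."""
    return reconstruction_error + kl_to_standard_normal(mu, log_var)

rng = random.Random(0)
mu, log_var = [0.3, -1.2], [-0.5, 0.1]  # hypothetical encoder output for one input
z = sample_latent(mu, log_var, rng)
print(z, kl_to_standard_normal(mu, log_var))
```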

VAEs are well-suited for anomaly detection, medical imaging, and data compression tasks. They are less resource-intensive than diffusion models or transformers, but their generated outputs are usually less sharp and detailed, making them better for behind-the-scenes analytics than consumer-facing creative tools.

Retrieval-augmented generation

In addition to these core models, there is a growing interest in techniques that improve how generative models work in real-world applications. One such approach is retrieval-augmented generation (RAG).

RAG pairs a language model with an external knowledge source. Instead of relying only on what the model remembers from training, the system retrieves relevant information from databases, documents, or APIs at query time and passes it to the model along with the user's request. This makes the generated output more accurate, grounded in facts, and context-aware.
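Stripped to its essentials, RAG is "retrieve, then prompt." The sketch below scores documents with a simple bag-of-words cosine similarity, a deliberate stand-in for the embedding search most production systems use, and assembles the retrieved passages into a grounded prompt. The knowledge base is hypothetical, and the final call to a language model is left out because it depends on the provider or model you choose.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def bow_cosine(a, b):
    """Bag-of-words cosine similarity; a stand-in for comparing embeddings."""
    ca, cb = Counter(tokenize(a)), Counter(tokenize(b))
    dot = sum(count * cb[word] for word, count in ca.items())
    norm = math.sqrt(sum(v * v for v in ca.values())) * math.sqrt(sum(v * v for v in cb.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    return sorted(documents, key=lambda doc: bow_cosine(query, doc), reverse=True)[:k]

def build_prompt(query, documents, k=2):
    """Ground the model by placing retrieved passages in front of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

# Hypothetical knowledge base for a customer-support assistant.
docs = [
    "Refunds are issued within 14 days of receiving the returned item.",
    "Our support team is available Monday to Friday, 9am to 6pm CET.",
    "Premium plans include priority support and a dedicated account manager.",
]
prompt = build_prompt("How long do refunds take?", docs, k=1)
print(prompt)  # this string would then be sent to the language model
```

Updating the answers then means updating `docs`, not retraining the model, which is the main practical appeal of RAG.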

While RAG is not a generative model itself, it enhances the quality and reliability of outputs produced by models like transformers, helping bridge the gap between static model knowledge and up-to-date external data.

RAG is a strong choice for industries that require factual accuracy, such as healthcare, law, and customer support. It lowers the risk of hallucinations and improves trust, but it depends on the quality of the external data source.

What Can Generative AI Do?

One of the most important tasks before building generative AI applications is understanding what they can do. This helps you determine whether the technology will truly make an impact on your operations rather than merely serve as a nice-to-have accessory.

Here's a non-exhaustive list of practical generative AI applications in business.

- Image and video generation. Generative AI can handle retouching, background removal, upscaling, and even produce visuals from scratch. For example, we built Dyvo, an image editor with AI capabilities, to help users generate unique avatars from selfies in seconds.

- Text generation and summarization. From marketing copy and product descriptions to translations and condensed reports, AI helps teams move faster with content-heavy work. See our Hamlet case study for an example.

- Conversational AI (chatbots and assistants). Companies can use generative AI technologies to build smart chatbots that can resolve customer queries, guide onboarding, and act as copilots for employees.

- Data-to-structure conversion. GenAI can turn unstructured information into structured formats like JSON, Figma files, or standardized records, so teams can actually use it.

- AI monitoring. Generative AI can process massive amounts of data and surface anomalies or unusual patterns, such as signs of fraud. This helps organizations catch issues early and act on insights that humans might miss.

- Audio and speech generation. Generative AI can produce lifelike voice synthesis, dubbing, music composition, and personalized sound experiences.
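Data-to-structure conversion is only useful if the output is reliable, so it's worth validating what the model returns before anything downstream uses it. This sketch assumes the model was prompted to answer in JSON following a hypothetical invoice schema (`vendor`, `total`, `currency`); it parses the reply and reports anything missing or mistyped, so the caller can retry or route the item to a human.

```python
import json

# Hypothetical schema for the structured record the model was asked to produce.
REQUIRED_FIELDS = {"vendor": str, "total": (int, float), "currency": str}

def parse_structured_output(raw_reply):
    """Return (record, errors). An empty error list means the record is safe to use."""
    try:
        record = json.loads(raw_reply)
    except json.JSONDecodeError as exc:
        return None, [f"not valid JSON: {exc.msg}"]
    if not isinstance(record, dict):
        return None, ["expected a JSON object"]
    errors = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        # bool is a subclass of int in Python, so reject it explicitly.
        elif not isinstance(record[field], expected_type) or isinstance(record[field], bool):
            errors.append(f"wrong type for {field}")
    return record, errors

good, errs = parse_structured_output('{"vendor": "Acme", "total": 129.5, "currency": "EUR"}')
print(errs)  # []
bad, errs = parse_structured_output('{"vendor": "Acme", "total": "129.5"}')
print(errs)  # ['wrong type for total', 'missing field: currency']
```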

Generative AI across industries

Generative AI has crossed into the mainstream, opening entirely new ways of working across industries:

- Human resources. Platforms like Plai generate personalized career development plans and automate performance review insights.

- Finance. Generative AI consolidates and summarizes financial data, generating reports that support planning and investment.

- E-commerce. AI powers product discovery chatbots, personalized recommendations, and brand-consistent customer support.

- Healthcare. GenAI produces patient-friendly summaries, automates clinical documentation, and reduces administrative overhead for providers.

- Real estate. AI generates property listings, creates virtual staging visuals, and personalizes recommendations for buyers and renters.

- Logistics. It predicts demand, generates optimized delivery routes, and automates scheduling to improve supply chain efficiency.

- Insurance. AI generates claim summaries, automates document reviews, and powers chatbots that guide customers through policies and coverage options.

And this is just the beginning. As adoption accelerates, new applications are emerging every quarter. If you want to figure out how to build generative AI from scratch, the next section deserves your closest attention.

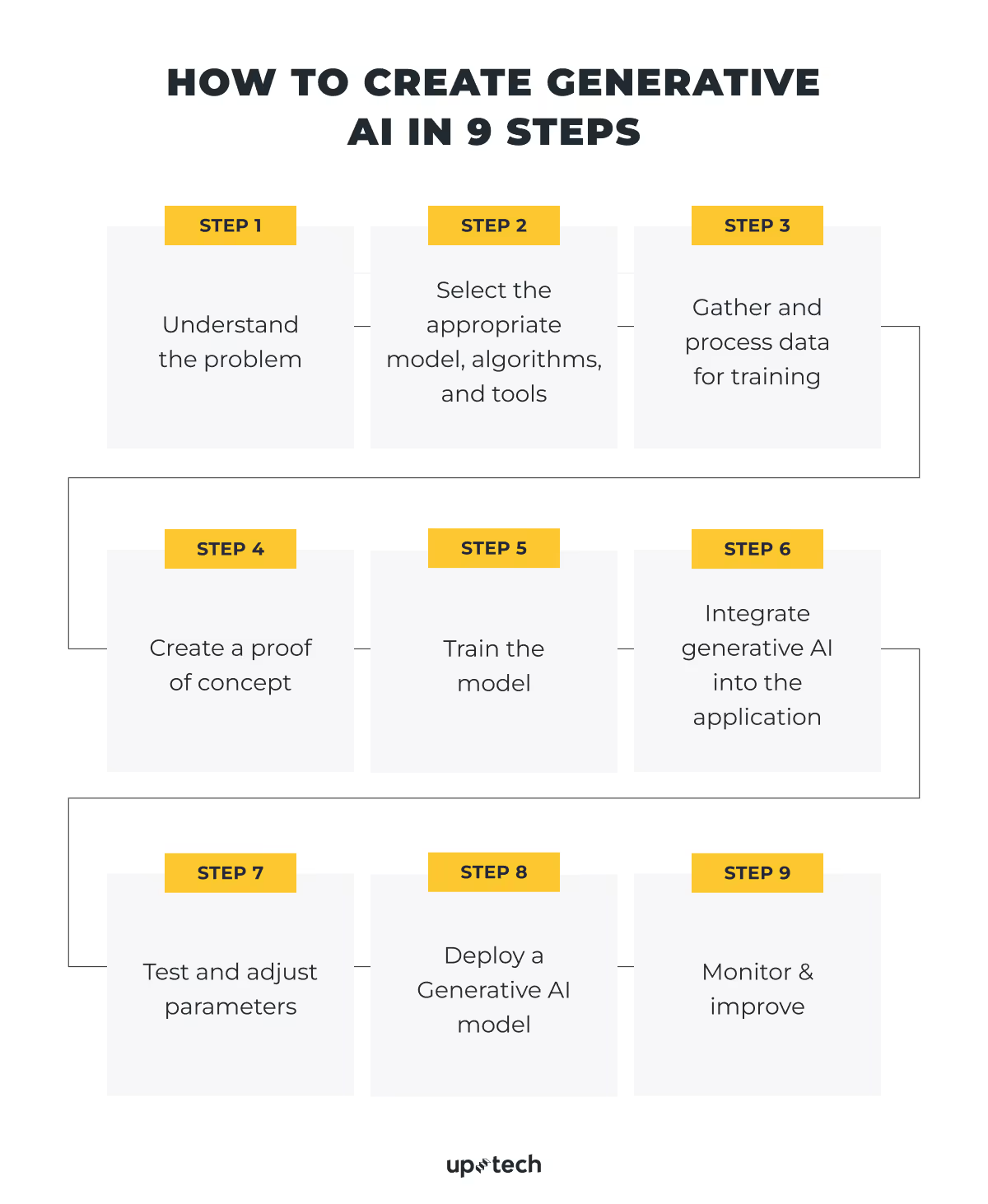

How to Create Generative AI in 9 Steps

Like it or not, generative AI is here to stay and will disrupt various industries in the near future. However, you can make the technology work in your favor by building your own genAI solutions. At Uptech, we've helped companies develop generative AI capabilities from scratch, and here's how we do it.

Step 1. Understand the problem

As powerful as they are, generative AI models only make sense when they can solve the right problem. Therefore, we'll first need to determine the exact problems you're trying to solve. For example, a public speaking training company might need an AI system to turn ideas into speeches. This differs from a video upscaler, which uses a computer vision model to reproduce old videos in high-quality formats.

Uptech pro tip: Create a realistic performance baseline, which helps benchmark the model's actual performance when deployed. It allows you to manage expectations and suggest further improvements down the line.
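For a text-generation feature, the baseline can be a simple heuristic the model has to beat. This sketch assumes a summarization task: it scores a "first sentence" baseline against reference summaries with a simplified ROUGE-1 recall (the share of reference words the candidate covers). The data is made up, and a real evaluation would use an established metric implementation and a proper held-out test set.

```python
import re
from collections import Counter

def words(text):
    return re.findall(r"[a-z0-9']+", text.lower())

def rouge1_recall(candidate, reference):
    """Simplified ROUGE-1 recall: share of reference words (with counts) that the candidate covers."""
    ref = Counter(words(reference))
    overlap = sum((Counter(words(candidate)) & ref).values())
    total = sum(ref.values())
    return overlap / total if total else 0.0

def lead_sentence_baseline(document):
    """Baseline 'summary': just the first sentence of the document."""
    return re.split(r"(?<=[.!?])\s+", document.strip())[0]

def average_score(summarize, dataset):
    return sum(rouge1_recall(summarize(doc), ref) for doc, ref in dataset) / len(dataset)

# Hypothetical evaluation set of (document, reference summary) pairs.
dataset = [
    ("Sales rose 12% in Q3. Costs stayed flat. The team hired two engineers.",
     "Q3 sales rose 12% while costs stayed flat."),
    ("The app update fixes login bugs. It also adds dark mode.",
     "Update fixes login bugs and adds dark mode."),
]
baseline = average_score(lead_sentence_baseline, dataset)
print(f"baseline ROUGE-1 recall: {baseline:.2f}")  # a candidate model should clear this bar
```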

Step 2. Select the appropriate model, algorithms, and tools

Depending on the application's purpose, we assemble the relevant software tools, foundation model, and tech stack. It's also helpful to set up a pipeline that lets developers train, fine-tune, test, and deploy the model collaboratively.

Another key decision at this point is whether to use a pre-trained model or train a model from scratch. Let's look at the differences between these two approaches.

Using pre-trained models

Pre-trained models have already been trained on huge datasets, often billions of text passages, images, or other examples. They can recognize patterns in language or images out of the box.

Fine-tuning a pre-trained model for a specific task typically requires a smaller dataset, sometimes just a few thousand to tens of thousands of examples, and a lot less computing power.

This approach can speed up development by months and cut costs dramatically because much of the heavy lifting has already been done.

Training generative AI from scratch

Training generative AI from scratch, on the other hand, means building a model with no prior knowledge. It requires massive datasets, often millions of high-quality examples, and significant computational resources to work.

Companies need cloud infrastructure and a dedicated team of data engineers, ML engineers, data scientists, and other specialists to handle training, tuning, and troubleshooting. Costs can climb into hundreds of thousands of dollars, and timelines often stretch over many months if not years.

Hardware is another major consideration. GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units) are designed to handle the huge volume of calculations that AI models require. Without this specialized hardware, training even a modest model could take weeks instead of days. Either way, plan for it in the budget and timeline: renting accelerators raises cloud bills, while doing without them delays the project.
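A back-of-the-envelope estimate shows why hardware dominates the budget. A widely used rule of thumb for dense transformer models puts training compute at roughly 6 × parameters × training tokens floating-point operations. The sketch below turns that into days of training; every input (model size, token count, per-GPU throughput, utilization) is an illustrative assumption, not a quote for any real project or chip.

```python
SECONDS_PER_DAY = 86_400

def training_days(params, tokens, device_flops_per_sec, utilization=0.4, num_devices=1):
    """Rough wall-clock training time from the ~6 * N * D FLOPs rule of thumb."""
    total_flops = 6 * params * tokens
    effective_flops_per_sec = device_flops_per_sec * utilization * num_devices
    return total_flops / effective_flops_per_sec / SECONDS_PER_DAY

# Illustrative assumptions: a 1B-parameter model trained on 20B tokens, on GPUs that
# peak at 3e14 FLOP/s and sustain 40% of that in practice.
days_one_gpu = training_days(1e9, 20e9, 3e14)
days_64_gpus = training_days(1e9, 20e9, 3e14, num_devices=64)
print(f"{days_one_gpu:.1f} days on 1 GPU, {days_64_gpus:.2f} days on 64 GPUs")
```

The estimate scales linearly with both model size and data, so a model ten times larger trained on ten times more tokens needs about a hundred times the compute.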

As for the tech stack, we at Uptech commonly use these technologies and frameworks to develop generative AI software from scratch.

- PyTorch and TensorFlow. Both are popular machine learning frameworks that developers use to build and train machine learning models. We use these frameworks to debug and observe the model's behavior in different operating environments.

- Jupyter Notebook is an interactive platform our team uses to prototype AI applications. It lets us run and test small units of code and see the results immediately.

- Hugging Face provides open-source machine learning resources, such as pre-trained large language models and datasets for building AI software. It helps us develop applications for natural language processing tasks like sentiment analysis and named entity recognition.

- Pandas is an open-source Python library that helps AI developers work with tabular data. It supports data manipulation, analysis, and basic visualization when building generative AI apps.

- OpenCV contains resources and libraries that help us build computer vision AI applications. For example, it provides functions enabling developers to implement object detection, feature extraction, and depth estimation.

- Scikit-learn is a Python library for classical machine learning that covers the workflow from data preprocessing to model evaluation. It supports a wide range of tasks, such as regression, classification, and model selection.

- Cloud infrastructure and tools like AWS, Terraform, and GitHub allow us to build a seamless, end-to-end generative AI development workflow in the cloud.

Step 3. Gather and process data for training

So whether you opt for a downloadable pre-trained model from a hub like Hugging Face or decide to play big and build a generative AI model from scratch, you still need business-specific datasets. To build them, we first collect data from different sources and make sure it is relevant and matches the goals of the project. Raw datasets often need serious preparation before training can even start.

Note: In practice, data exploration and analysis sometimes come before the final model choice, since the type and volume of data can influence which models are appropriate for the task.

Data evaluation usually comes first. We review the collected data to check whether it's complete, consistent, and suitable for the task at hand. Poor-quality data can easily mislead the model and cause major performance issues later.

Then we move to cleansing. The team removes duplicates, fixes incorrect entries, fills in missing values when possible, and eliminates any irrelevant information. Clean data prevents the model from learning from mistakes or noise.
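On a small scale, the cleansing steps look like this. The sketch assumes hypothetical training records with `text` and `label` fields and a hand-maintained table of known label typos; it drops incomplete rows rather than filling them in, since safe imputation depends on the data. Production pipelines often do the same with a dataframe library, but plain Python keeps the logic visible.

```python
# Hypothetical map of known labeling mistakes to their corrected values.
LABEL_FIXES = {"postive": "positive", "neg": "negative"}
FIELDS = ("text", "label")

def clean_records(records):
    seen = set()
    cleaned = []
    for raw in records:
        # Keep only the fields training needs; everything else is irrelevant here.
        rec = {field: raw.get(field) for field in FIELDS}
        # Collapse stray whitespace so near-identical rows are recognized as duplicates.
        if isinstance(rec["text"], str):
            rec["text"] = " ".join(rec["text"].split())
        # Fix known incorrect entries.
        rec["label"] = LABEL_FIXES.get(rec["label"], rec["label"])
        # Skip rows with missing values instead of guessing them.
        if any(value in (None, "") for value in rec.values()):
            continue
        key = (rec["text"], rec["label"])
        if key in seen:  # duplicate of a row we already kept
            continue
        seen.add(key)
        cleaned.append(rec)
    return cleaned

records = [
    {"text": "Great  product!", "label": "postive", "source_url": "https://example.com/1"},
    {"text": "Great product!", "label": "positive"},
    {"text": "", "label": "negative"},
    {"text": "Broke after a week", "label": "neg"},
]
print(clean_records(records))
```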

Once the data is clean, it must be normalized. Normalization brings all data into a consistent format:

- Conversion of the text to lowercase

- Standardization of numerical ranges

- Categories alignment, etc.

Without normalization, the model might misinterpret small technical differences as meaningful patterns, which weakens the final results.
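Each normalization step in the list above maps to a small function. The alias table is a hypothetical example of category alignment, and min-max scaling is just one common way to standardize numeric ranges (z-score standardization is another).

```python
def normalize_text(text):
    """Lowercase and collapse whitespace so 'Running  SHOES' and 'running shoes' match."""
    return " ".join(text.lower().split())

def min_max_scale(values):
    """Rescale numbers to the 0-1 range so features with large units don't dominate."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]

# Hypothetical alias table mapping inconsistent spellings to one canonical category.
COUNTRY_ALIASES = {"usa": "US", "u.s.": "US", "united states": "US", "uk": "GB", "united kingdom": "GB"}

def align_category(raw):
    cleaned = raw.strip()
    return COUNTRY_ALIASES.get(cleaned.lower(), cleaned)

print(normalize_text("  Running  SHOES "))  # running shoes
print(min_max_scale([10, 20, 30]))  # [0.0, 0.5, 1.0]
print(align_category(" U.S. "))  # US
```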

During data preparation, our annotators often work closely with domain experts to create labeled datasets, especially for supervised learning. Annotators assign the correct tags or categories to each data sample; for instance, they may mark images by object type or tag text by sentiment. High-quality labeling is a must because it directly teaches the model what output to predict for a given input.

For example, when preparing textual datasets, we not only tokenize the text and perform part-of-speech tagging, but also label specific entities or categories based on the project's needs. These detailed steps help build structured, trustworthy training data that leads to more reliable AI models.
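Entity labels are often stored in the BIO format (Beginning, Inside, Outside), with one tag per token. The sketch below converts hypothetical annotator spans into BIO tags. It splits on whitespace for simplicity, whereas real pipelines use a proper tokenizer, and the entity types `PER`, `ORG`, and `LOC` are just a common convention.

```python
def to_bio(tokens, entities):
    """entities: (start, end_exclusive, label) token spans marked by annotators."""
    tags = ["O"] * len(tokens)  # everything starts as Outside any entity
    for start, end, label in entities:
        tags[start] = f"B-{label}"
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"
    return list(zip(tokens, tags))

tokens = "Ada joined Acme Corp in Berlin last May".split()
spans = [(0, 1, "PER"), (2, 4, "ORG"), (5, 6, "LOC")]  # hypothetical annotations
for token, tag in to_bio(tokens, spans):
    print(f"{token}\t{tag}")
```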

Step 4. Create a proof of concept

A good practice when building generative AI solutions is to start with a proof of concept (PoC) rather than integrating a fully trained model into the software right away. That means using a simple model that demonstrates basic capabilities so you can get feedback from the target audience.

Note that the model may not produce the desired performance, but it allows you to test the idea and gather feedback from real users.

Step 5. Train the model

Next, we thoroughly train the model. We feed the AI model with the datasets prepared earlier so that it can learn specific patterns, which it later uses to solve business problems. Generative AI models use self-supervised and semi-supervised learning methods to train. Human involvement is essential to fine-tune and align the model's scope, accuracy, and consistency with the business objective. For that, we evaluate the model's performance with recall, F1 score, and other metrics.
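"Self-supervised" means the training signal comes from the data itself. For a language model, each position in raw text becomes a training example whose target is simply the next token, so this stage needs no human labeling. The sketch shows the idea with whitespace tokens and a hypothetical `context_size`; real models use subword tokenizers and far longer contexts.

```python
def next_token_pairs(tokens, context_size):
    """Turn unlabeled text into (context, target) pairs: the text supervises itself."""
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]  # up to context_size previous tokens
        pairs.append((context, tokens[i]))
    return pairs

tokens = "the cat sat on the mat".split()
for context, target in next_token_pairs(tokens, context_size=3):
    print(context, "->", target)
```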

However, as we said earlier, this is a very expensive step. When we identify that a pre-trained model would work with only minor fine-tuning, and this aligns with the client's expectations, we typically go with this more cost-effective route.

Step 6. Integrate generative AI into the application

Once the model reaches the required level of quality and performance and meets the client's expectations, we incorporate it into the application. This involves hours of coding, integration, and testing app functions in different environments. At this point, it's crucial to consider scalability, data security, and error handling. Because generative AI systems can require immense computing power, we may also recommend moving to GPU- or TPU-based machines.
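Error handling around model calls deserves specific attention, because hosted and self-hosted models alike can time out or be temporarily overloaded. One common pattern is retrying with exponential backoff and jitter. In this sketch, `generate` stands for whatever function calls your model and `ModelUnavailableError` is a hypothetical exception type; map it to the real errors your client library raises. The `sleep` parameter is injectable so tests don't actually wait.

```python
import random
import time

class ModelUnavailableError(Exception):
    """Hypothetical error for an overloaded or unreachable model backend."""

def call_with_retries(generate, prompt, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call generate(prompt), retrying transient failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return generate(prompt)
        except ModelUnavailableError:
            if attempt == max_attempts:
                raise  # give up and let the caller show a fallback message
            # Base waits of 0.5s, 1s, 2s, ..., each scaled by a random factor between 1 and 2
            # (jitter) so many clients don't retry in lockstep.
            sleep(base_delay * 2 ** (attempt - 1) * (1 + random.random()))

# Usage with a fake model that is busy twice, then answers.
_replies = iter([ModelUnavailableError("busy"), ModelUnavailableError("busy"), "Hello!"])

def flaky_model(prompt):
    reply = next(_replies)
    if isinstance(reply, Exception):
        raise reply
    return reply

answer = call_with_retries(flaky_model, "Say hello", sleep=lambda seconds: None)
print(answer)  # Hello!
```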

Step 7. Test and adjust parameters

A deep learning model's behavior depends on many hyperparameters. For example, a poorly chosen number of layers, number of hidden units, or dropout rate can lead to overfitting or underfitting.

Therefore, we run thorough tests to make sure the model performs optimally. If needed, we apply hyperparameter optimization techniques such as random search and grid search to obtain the best performance.
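Random search is straightforward to sketch. The search space below (learning rate, dropout, number of layers) is a hypothetical example, and `toy_validation_loss` stands in for the expensive part: training a candidate and measuring its validation loss. Sampling the learning rate on a log scale is a common choice because useful values span several orders of magnitude.

```python
import math
import random

# Hypothetical search space.
SEARCH_SPACE = {
    "learning_rate": (1e-5, 1e-2),  # sampled log-uniformly
    "dropout": (0.0, 0.5),
    "num_layers": [2, 4, 6, 8],
}

def sample_config(rng):
    low, high = SEARCH_SPACE["learning_rate"]
    return {
        "learning_rate": 10 ** rng.uniform(math.log10(low), math.log10(high)),
        "dropout": rng.uniform(*SEARCH_SPACE["dropout"]),
        "num_layers": rng.choice(SEARCH_SPACE["num_layers"]),
    }

def random_search(evaluate, n_trials=20, seed=42):
    """Try n_trials random configurations and keep the one with the lowest loss."""
    rng = random.Random(seed)
    best_config, best_loss = None, float("inf")
    for _ in range(n_trials):
        config = sample_config(rng)
        loss = evaluate(config)  # in practice: train briefly, return validation loss
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss

def toy_validation_loss(config):
    """Hypothetical stand-in for training; lowest near lr=1e-3, dropout=0.1, 4 layers."""
    return ((math.log10(config["learning_rate"]) + 3) ** 2
            + (config["dropout"] - 0.1) ** 2
            + 0.01 * abs(config["num_layers"] - 4))

best_config, best_loss = random_search(toy_validation_loss, n_trials=50)
print(best_config, round(best_loss, 4))
```

Grid search would instead evaluate every combination of a fixed set of values, which becomes expensive quickly as the number of hyperparameters grows.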

Testing goes beyond just adjusting technical settings. We also track basic performance metrics like accuracy, precision, and recall to measure how well the model handles real-world data. Depending on the application, we might monitor output quality, response time, or error rates as additional checks.
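Precision and recall come straight from counts of correct and incorrect predictions, and F1 is their harmonic mean. The counts in this sketch are hypothetical.

```python
def precision_recall_f1(true_positives, false_positives, false_negatives):
    """Precision: how many flagged items were right. Recall: how many right items were flagged."""
    predicted = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Hypothetical counts: 8 correct detections, 2 false alarms, 4 misses.
p, r, f1 = precision_recall_f1(8, 2, 4)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")  # precision=0.80 recall=0.67 f1=0.73
```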

User feedback often plays an important role, too, especially for customer-facing applications. By analyzing user behavior and collecting feedback, we can catch patterns that pure testing might miss. These insights help refine the model in practical, real-world conditions.

Keep in mind that testing and tuning are not one-time activities. They form an ongoing, iterative process where we continuously evaluate results, make adjustments, and push the model toward better performance over time.

Step 8. Deploy a generative AI model

We then deploy the AI application on the public cloud or on-premise infrastructure.

We practice a strong DevOps culture and maintain a robust CI/CD (Continuous Integration/Continuous Deployment) pipeline to ensure you always have a production-ready build. CI/CD allows us to automate testing, integration, and deployment, so updates and improvements reach production faster and with fewer errors.

Before deployment, we package the AI application using tools like Docker for containerization. Containerization bundles the code, model, and all necessary dependencies into one lightweight unit, so the app runs consistently across different environments, whether it's a developer's laptop, a test server, or the production cloud.

For larger systems, we use orchestration platforms like Kubernetes. Kubernetes manages multiple containers across clusters of machines, automatically handling scaling, load balancing, recovery from failures, and smooth rollouts of updates. It helps keep the AI application responsive and resilient, even as usage grows.

Choosing between cloud and on-premise deployment depends on the project’s needs:

- Cloud deployments (AWS, Azure, GCP) offer flexibility, easy scaling, faster setup, and access to powerful hardware like GPUs and TPUs. However, they come with ongoing costs and raise concerns about data privacy for sensitive industries like healthcare or finance.

- On-premise deployments give you full control over security, compliance, and system customization. They can also help lower long-term operational costs for large-scale, stable workloads. The downside is the high upfront investment in infrastructure, longer setup times, and the need for an in-house team to manage the environment.

Step 9. Monitor and improve

Deployment is not the final step; it's the beginning of a continuous cycle of monitoring, maintenance, and updates that keeps the AI application effective as business needs evolve.

Our team continues to provide post-release support and evaluate the model's performance in real-life use cases. We look for bottlenecks and instances where the model fails to analyze or produce relevant outputs with real-world data. Then, we run further tests, refine the model, and revise the application. At the same time, our team studies user feedback to ensure the app has a good product-market fit.

Additional Tech Considerations when Building GenAI Models

Modern generative AI solutions involve more than just model selection. Successful products require robust operational practices and a clear focus on ethical, compliant deployment from the beginning. That’s why we'd like to point out a few more things regarding generative AI development.

MLOps and the role of operational AI

MLOps (Machine Learning Operations) introduces proven DevOps practices into AI workflows.

Its core task is to ensure that generative AI models are trained, tested, deployed, monitored, and updated systematically to maintain performance over time.

Key elements of MLOps include:

- Versioning datasets and models to track changes and support reproducibility.

- Monitoring model performance to detect drift or sudden drops in accuracy.

- Automating retraining when performance metrics fall below acceptable levels.
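Drift monitoring can start with comparing the distribution of a feature or model score in production against the training data. The sketch below computes the Population Stability Index (PSI) over fixed bins. The bin edges, sample scores, and 0.2 alert threshold are assumptions (0.2 is a commonly cited rule of thumb, not a standard); a real MLOps setup would run a check like this on a schedule and trigger review or retraining.

```python
import math
from bisect import bisect_right

def bin_shares(values, edges):
    """Fraction of values falling into each bin defined by sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]

def population_stability_index(expected, actual, edges, eps=1e-6):
    psi = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi

edges = [0.25, 0.5, 0.75]  # hypothetical bins for a model confidence score
training_scores = [0.1, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9, 0.2]
production_scores = [0.05, 0.1, 0.15, 0.2, 0.3, 0.1, 0.6, 0.2]
psi = population_stability_index(training_scores, production_scores, edges)
if psi > 0.2:
    print(f"PSI={psi:.2f}: distribution has shifted, review or retrain")
```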

Can you do without it? Technically, yes. However, without a strong MLOps process, AI models risk becoming outdated, misaligned with business goals, or even non-compliant with regulations. Good MLOps practices, by contrast, keep AI products reliable, secure, and adaptable to new data and evolving user needs.

Governance, compliance, and responsible AI use

As AI capabilities grow, so do the responsibilities around how models are trained, deployed, and monitored. Anyone building generative AI products needs to know how to manage risks around bias, privacy, and ethical use; otherwise, the product's business success is at risk.

AI bias and how to address it

AI models often reflect the biases present in their training data. If left unchecked, this can lead to unfair or harmful outputs.

For example, let’s say you use a generative AI model to help the HR department automatically summarize candidate applications. If the training dataset includes resumes that overwhelmingly highlight male applicants in leadership roles and female applicants in support positions, the AI could start producing biased summaries.

It might unintentionally downplay female candidates' achievements or emphasize less relevant details, simply because of the patterns it learned from the data.

To address AI bias:

- Curate diverse and representative training datasets,

- Conduct regular bias and fairness audits,

- Introduce human review steps for sensitive AI applications, such as hiring, lending, or medical diagnosis.
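A basic fairness audit can compare outcome rates across groups. The sketch assumes a hypothetical log of model decisions (for instance, whether a candidate was recommended for an interview) tagged with a demographic group, and flags groups whose selection rate falls below 80% of the highest group's rate. The 80% cut-off echoes the "four-fifths rule" from US employment-selection guidelines; it is a screening heuristic, not a complete fairness test.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}

def flag_disparities(decisions, threshold=0.8):
    """Groups whose selection rate is below `threshold` times the best-treated group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        return {}
    return {group: round(rate / best, 2) for group, rate in rates.items() if rate / best < threshold}

# Hypothetical audit log: 6 of 10 group_a candidates shortlisted vs 3 of 10 in group_b.
decisions = [("group_a", i < 6) for i in range(10)] + [("group_b", i < 3) for i in range(10)]
print(flag_disparities(decisions))  # {'group_b': 0.5}
```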

Bias management is not a one-time effort either. It requires continuous evaluation as new data becomes available and new use cases emerge.

Data privacy and regulatory compliance

Generative AI products often handle sensitive user data, which makes data privacy a central concern. Laws like HIPAA, GDPR, and CCPA impose strict rules on how companies collect, store, and use personal information.

When we develop generative AI solutions, we always stick to the following practices for privacy and compliance:

- Anonymizing and encrypting all sensitive data.

- Collecting explicit user consent before using personal data.

- Setting clear access controls and data retention policies.

- Keeping audit logs to demonstrate compliance in case of regulatory review.
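As a small illustration of pseudonymization, the sketch below replaces phone-like numbers and email addresses in free text with stable tokens derived from a keyed hash (HMAC-SHA256), so records can still be linked without exposing the raw values. The regular expressions are deliberately simple and will miss many formats; real anonymization needs dedicated tooling, review, and a secret key kept outside the code, so treat `SECRET_KEY` and both patterns as placeholders.

```python
import hashlib
import hmac
import re

SECRET_KEY = b"replace-with-a-key-from-your-secrets-manager"  # placeholder only

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def pseudonym(value, kind):
    """Stable token: the same input always maps to the same token, but the value isn't recoverable from it."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:10]
    return f"<{kind}_{digest}>"

def pseudonymize(text):
    # Replace phone numbers first so the hex digits in email tokens can't be mistaken for phones.
    text = PHONE_RE.sub(lambda m: pseudonym(m.group(0), "PHONE"), text)
    return EMAIL_RE.sub(lambda m: pseudonym(m.group(0), "EMAIL"), text)

note = "Contact jane.doe@example.com or +1 555 010 2030 about the claim."
out = pseudonymize(note)
print(out)
```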

Failure to comply can lead to heavy fines, reputational damage, and user distrust.

Ethical AI use

To promote ethical AI usage:

- Add disclaimers when generated content could contain inaccuracies.

- Clearly educate users about the AI system’s capabilities and limitations.

- Provide users with feedback channels to report issues or harmful outputs.

Ethical AI is not only about protecting the company legally; it also strengthens user trust and builds a competitive advantage. With new regulations like the EU AI Act setting strict requirements for transparency, fairness, and accountability, responsible AI practices are no longer optional.

Importance of strong data governance

Data governance defines how information is collected, verified, stored, shared, and retired throughout the AI system's lifecycle.

Strong governance practices ensure:

- Consistent quality standards across all datasets,

- Clear ownership and accountability,

- Full transparency on how AI decisions are made and which data influences outcomes.

Good governance enables faster scaling, better compliance, and higher-quality AI outputs.

Now that we've covered the more technical aspects of generative AI model development and deployment, let's take a closer look at the business opportunities that have already emerged and may emerge soon.

A Glance at the Generative AI Market, Benefits, and Drawbacks

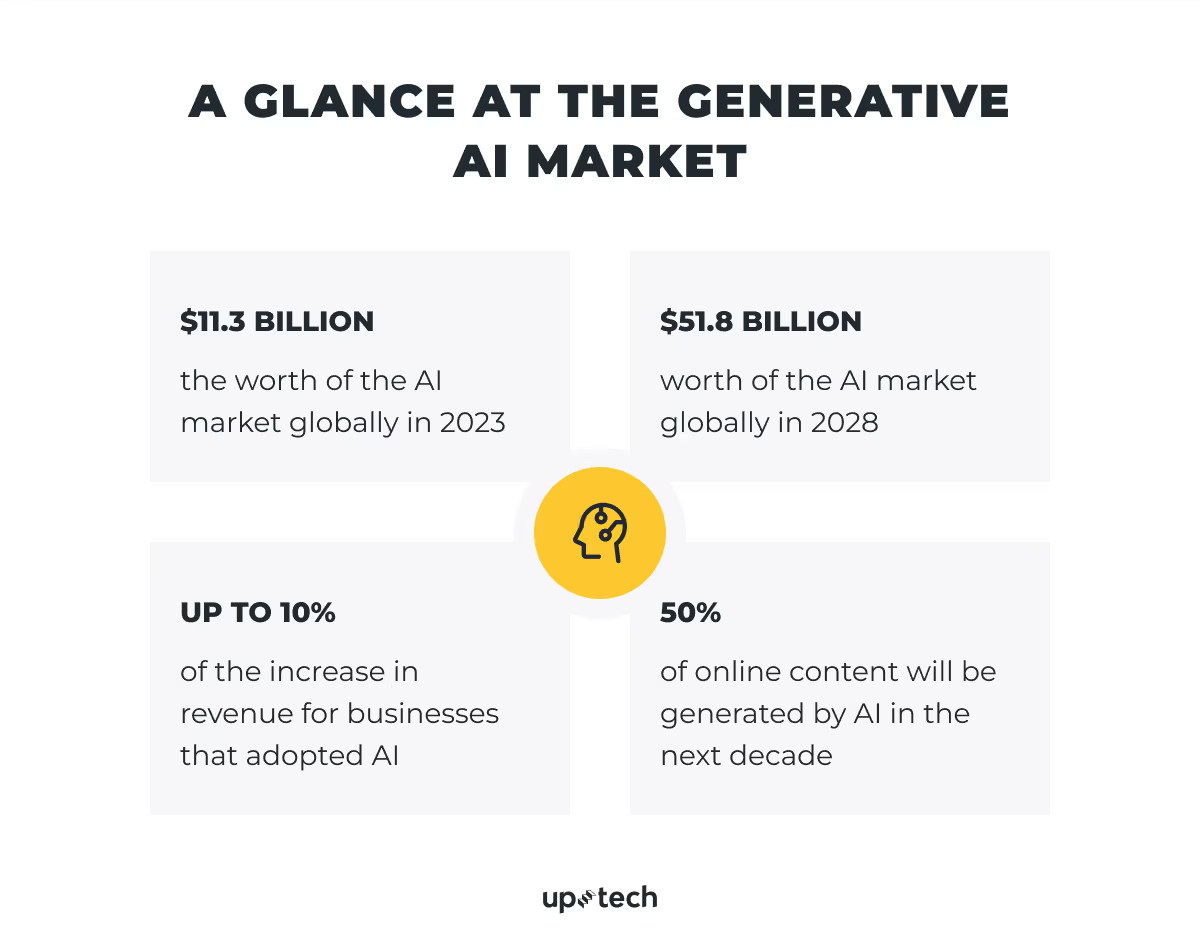

Financially, businesses have a huge incentive to board the generative AI train, whether as providers or users. One market study valued the generative AI market at $11.3 billion globally and projected it to grow at a compound annual growth rate (CAGR) of 35.6%, reaching $51.8 billion in 2028. Moreover, businesses that adopted AI in their workflows have reported up to a 10% increase in revenue.
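A quick check confirms that a 35.6% annual growth rate links the two market figures, assuming the $11.3 billion is the 2023 baseline (five years before 2028):

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: the constant yearly rate that links start to end."""
    return (end_value / start_value) ** (1 / years) - 1

rate = cagr(11.3, 51.8, years=5)
print(f"{rate:.1%}")  # 35.6%
```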

Even as we pen our thoughts, the generative AI space continues to grow rapidly. New large language models with billions of parameters keep being trained and released. Everyday users are impressed by tools like Midjourney that can produce vivid, lifelike images, while marketers increasingly rely on advanced models like GPT-5 to create engaging content.

Here are some interesting insights on where the generative AI market is heading.

- The COVID pandemic has fueled the adoption of generative AI solutions, with major players like AWS, Google, and Microsoft taking the lead.

- Both diffusion and transformer-based neural networks continue to drive emerging AI systems.

- AI-generated content, including text and images, is projected to make up 50% of online content within the next decade.

- With companies like OpenAI, Nvidia, and Google at the fore, the US continues to lead the generative AI market. At the same time, China is quickly closing the gap with its own large language models, such as those from DeepSeek.

- Wide adoption is expected in various industries, including AR/VR, healthcare, retail, cloud computing, and media.

All in all, there is optimism in the air about how one of the most exciting technologies in modern times will stamp its mark on our lives.

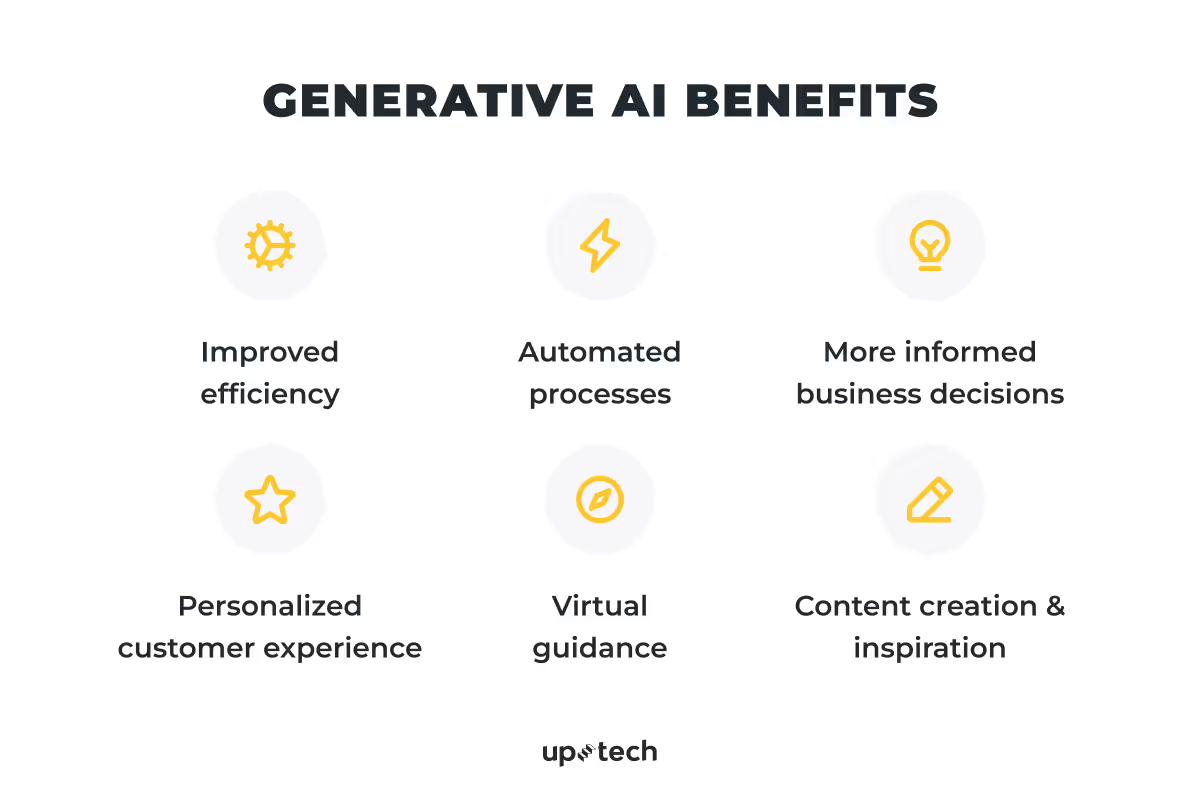

What are the benefits of generative AI?

Beneath the buzz brought on by ChatGPT, these advanced machine learning models offer many opportunities and benefits in real-life applications. I share several notable examples below.

Improved efficiency

Generative AI immediately improves business productivity by letting professionals complete repetitive tasks quickly. For example, AI software can help marketers draft a marketing plan in seconds, a process that normally takes hours. This way, you can allocate your resources to other creative or people-intensive tasks.

Automated processes

Many businesses are streamlining their workflows to enable better employee collaboration. Generative AI lets companies plug robust AI capabilities into their existing enterprise systems and automate routine steps within those workflows.

More informed business decisions

At the core of generative AI is a multilayer neural network capable of processing vast amounts of data. Businesses can leverage the AI engine to support decision-making by reducing costly oversights.

Personalized customer experience

Pre-trained generative AI models can be further fine-tuned with information on your products and services. This drives AI personalization at scale, letting you engage customers with automated, relevant, and tailored responses that enhance their journey.

Virtual guidance

Generative AI opens up the possibility of smart AI trainers for the public. Businesses can train the model in various disciplines to help users explore new areas of interest.

Content creation and inspiration

Language models like GPT are trained with large amounts of text. They can write poetry, creative stories, and quotes, and perform other tasks to help content creators with imaginative works.

What are the drawbacks of generative AI?

While industries are raring to adopt generative AI, there are still concerns about its usage.

- Generative AI relies on large, complex models that are computationally intensive to train and operate. For example, GPT-4 is widely reported to have more than 1 trillion parameters, although OpenAI has not disclosed its size. Models at this scale are extremely difficult and expensive to train. Moreover, pre-trained models often require further fine-tuning, sometimes with human feedback, before they are fit for specific use cases.

- There are legitimate concerns that generative AI applications will replace the traditional workforce. Companies on a tight budget are already using AI to fill certain roles. Like all revolutionary technologies, generative AI will also create new opportunities, but the full implications remain to be seen.

- While AI can mimic humans, its output can be wrong or unfair. A major cause is bias: the model reproduces the prejudices, gaps, or errors in the data it was trained on. For example, an AI credit scoring system might reject a loan application simply because its training data contains too little information on certain demographics.
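To see why model size drives cost, as described in the first drawback above, a back-of-the-envelope estimate helps: each parameter occupies a fixed number of bytes depending on its numeric precision. The sketch below computes only the memory needed to store the weights. The 1-trillion figure is an unconfirmed estimate, and training needs considerably more memory for gradients, optimizer state, and activations.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16/bf16": 2, "int8": 1}

def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes).
    Excludes activations, gradients, and optimizer state."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

# Assumption: the unconfirmed ~1 trillion parameter estimate for GPT-4.
params = 1e12
for precision in BYTES_PER_PARAM:
    print(f"{precision}: {weight_memory_gb(params, precision):,.0f} GB")
```

At 16-bit precision, the weights alone would take about 2,000 GB, so simply holding them in memory would require at least 25 data-center GPUs with 80 GB each, before any training begins.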

How Generative AI is Changing Industries

The term generative AI might be new to many consumers, but the technology is already impacting various industries. Corporate leaders are using deep learning models' ability to analyze large amounts of data and provide logical responses.

Fintech

Banks and alternative financial service providers use deep learning models for credit scoring and fraud detection. These models learn from vast amounts of financial data to detect fraudulent transactions and assess spending patterns and other credit factors. Generative models such as GANs can also produce synthetic transaction data to train fraud detectors when real fraud examples are scarce. The resulting models are then integrated into the bank's systems to automate and safeguard financial transactions.

Healthcare

In hospitals and medical facilities, generative AI supports efforts to improve patients' well-being in several ways. For example, generative models allow medical experts to reconstruct imaging data into realistic 3D representations. Such systems can also help analyze patient records and support diagnosis, freeing medical workers to focus on delivering better care.

Real estate

Real estate agents seek better ways to market properties. They use generative AI solutions, such as Jasper, to create marketing copy. Some turn to AI visual design software to generate realistic property and interior design images. More advanced AI tools can even produce a floor plan from a textual description.

E-commerce

Generative AI helps businesses grow e-commerce revenue in several ways. It generates appealing product descriptions and images for online stores. For example, marketers use Dyvo to create studio-quality product photos for e-commerce stores. AI also enables online retailers like Amazon to offer personalized recommendations, which can increase the value of each sale.

Education

Generative AI is changing how people learn and consume knowledge online. Instead of following a fixed syllabus, learners can study personalized lessons that educators prepare with AI tools. AI also lets teachers and coaches assess students in real time by analyzing assignments and summarizing the results.

Legal

Lawyers, burdened with paperwork and research, benefit from the robust capabilities of generative AI. Instead of searching archived documents for precedents or specific legal terms, they can let AI do the heavy lifting and concentrate on the creative parts of building a case.

Manufacturing

Manufacturers use generative AI systems to better predict logistics needs, market trends, and product demand. Advanced machine learning models can extract insights from real-time data to prepare manufacturers for changing market dynamics.

More of Uptech’s Tips to Help You Build State-of-the-Art GenAI

Learning how to build AI software clarifies the work involved. Still, unanticipated risks and generative AI challenges could undermine your efforts. Our team has walked this path and picked up valuable lessons that might help.

Here are some helpful pointers.

- Identify the specific parts of your business processes or solutions that would benefit most from generative AI. Not every feature needs an AI upgrade, especially given the cost of the technology. Instead, focus on changes that create the greatest impact for your users.

- Prioritize data protection to safeguard users' data and meet regulatory compliance. Training and operating generative AI involves moving large amounts of data around. Use protective measures, such as encryption, to reduce data risks.

- Generic AI software like ChatGPT might amaze the public, but it is difficult to tailor to specific business use cases. Furthermore, models of that scale are incredibly expensive to train. Hence, developing smaller, targeted AI models or fine-tuning pre-trained models makes more sense.

- The training dataset's quality greatly influences model performance. Focus on preparing high-quality datasets to reduce performance issues like bias and over-generalization.

- Despite rigorous training, generative AI is not perfect, so clearly communicate its limitations to your users. For example, OpenAI warns that ChatGPT may return incorrect or biased responses.
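As a concrete starting point for preparing high-quality datasets, the minimal sketch below applies three common cleaning steps to text samples: whitespace normalization, a minimum-length filter, and case-insensitive exact-duplicate removal. The `min_words` threshold and the sample records are assumptions for illustration. Real pipelines usually add near-duplicate detection, personal-data scrubbing, and checks for demographic balance.

```python
import re

def clean_dataset(records, min_words=5):
    """Normalize whitespace, drop very short texts, and remove exact
    duplicates (case-insensitive), keeping the first occurrence."""
    seen = set()
    cleaned = []
    for text in records:
        # Collapse runs of spaces, tabs, and newlines into single spaces.
        normalized = re.sub(r"\s+", " ", text).strip()
        if len(normalized.split()) < min_words:
            continue  # too short to teach the model anything useful
        key = normalized.lower()
        if key in seen:
            continue  # repeated samples over-weight certain patterns
        seen.add(key)
        cleaned.append(normalized)
    return cleaned

raw = [
    "Our return policy allows refunds within 30 days.",
    "our return policy   allows refunds within 30 days.",
    "OK",
    "Shipping is free for orders over $50 in the US.",
]
print(clean_dataset(raw))
```

Here, the second record is dropped as a duplicate and "OK" is dropped as too short, leaving two clean samples.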

Talk to our team to learn more about turning your generative AI ideas into market-worthy solutions.

How Much Does it Cost to Build a Generative AI Solution?

The prospect of developing generative AI software raises the question of affordability. Training and deploying the model itself is costly. Add standard development work, and you may wonder whether you're facing a hefty bill.

Yet, is AI development that expensive?

It depends on what you're trying to build and the solution's complexity. Unless you're making a generic AI tool like ChatGPT, you won't be paying astronomical sums to release an AI-powered app.

That said, the cost estimate also depends on other factors, such as training requirements, app features, and the nature of the application. Let’s look at approximate estimates for generative AI development based on these influencing factors.

Cost by engagement model

The pricing structure often depends on how you choose to engage with your development team. Common cooperation models include:

- Fixed-price models

- Time and material

- Dedicated team setups

Cost by project complexity

The overall cost heavily depends on the product's complexity. Projects that require custom model training, complex features, or integration with external systems naturally require more time, effort, and budget.

For context, our team can produce a proof of concept (PoC) within 3 months while keeping the price as low as $20,000.

Keep in mind that these estimates are ballpark figures. The actual cost can vary depending on the use case, team structure/seniority, and the specifics of your business goals.

Overall, building a generative AI solution is a complex journey that requires choosing the right model, preparing quality datasets, training and fine-tuning, and then deploying and monitoring the system over time. Businesses usually face two options:

- In-house development. This gives full control but requires a large expert team, significant infrastructure, and high costs.

- Outsourcing development. This one allows startups and growing companies to move faster, tap into seasoned expertise, and save costs by not reinventing the wheel. With the right partner, you reduce risks and accelerate time-to-market.

At Uptech, we help companies overcome this complexity with generative AI services:

- Consulting and discovery. We help define business goals, validate ideas, and select the right AI models and strategy for your needs.

- Proof-of-concept development. Within 3–8 weeks, we build and test a PoC so clients can validate assumptions, measure performance, and make confident decisions.

- Full development and integration. We take care of data governance, model fine-tuning, and integration of generative AI features into existing software.

- AI/ML engineers on demand. Clients who need extra talent can scale their team with our experienced engineers.

If you’re considering outsourcing instead of building in-house, explore our generative AI services in detail or reach out for a free consultation.
