EU AI Act readiness is no longer optional; it’s the next big milestone for any company that builds or uses AI in the European market. The EU Artificial Intelligence Act (EU AI Act) entered into force on August 1, 2024, and its first obligations began to apply on February 2, 2025. It introduces a new set of legal obligations for anyone working with AI, be it startups, freelancers, or large tech companies.

According to Gartner, more than 60% of CIOs had already included AI in their strategic plans by the time the Act took effect. Many of the same organizations had been working to protect EU residents’ personal data under GDPR. Now, with the AI Act in place, they face an additional layer of requirements specifically tied to how AI systems are developed and used.

I’m Oleh Komenchuk, ML Department Lead at Uptech. Over the past eight years, my team and I have helped businesses bring AI-powered features to life across industries, platforms, and use cases. In this article, I explain what the EU AI Act readiness assessment means in practice, how it affects software development, and what steps to take to stay compliant.

What is the EU AI Act?

The EU Artificial Intelligence Act (EU AI Act) is the world’s first comprehensive law focused entirely on artificial intelligence. It consists of 13 chapters and 113 articles, plus 13 annexes. The goal is clear: to encourage the safe, transparent, and human-centric use of AI across the European market. Instead of regulating individual AI features or technologies, the Act takes a risk-based approach. It classifies AI systems based on the level of risk they may pose and ties legal obligations to those risk levels.

Approved by the European Parliament in March 2024, the EU AI Act entered into force on August 1, 2024. Its first rules began to apply on February 2, 2025, with other obligations rolling out gradually. Most obligations take effect by August 2026, and some rules for high-risk systems embedded in regulated products follow in August 2027. This staggered timeline gives companies some space to adjust, but the clock is already ticking for anyone working with AI.

The EU AI Act lays out the following:

- Rules for placing on the market and using AI systems in the EU

- A list of prohibited AI practices that pose unacceptable risks

- Strict requirements for high-risk AI systems, including obligations for developers and deployers

- Transparency rules for certain AI systems, especially those that interact with users

- Specific rules for general-purpose AI models

- Guidelines for market monitoring and enforcement

- Support measures for innovation, especially for startups and small businesses

So, what does this new regulation mean to businesses?

How exactly the EU AI Act affects businesses

The companies that want to develop or use AI in the EU face new responsibilities, from how they design their system to how they bring it to market and monitor it after launch. The parties involved are providers, importers, deployers, distributors, product manufacturers, and authorised representatives.

The AI Act doesn’t just affect the tech: it also reshapes internal processes. Businesses must review their product strategy, legal workflows, and team structure to meet new obligations. Roles may shift, timelines may stretch, and accountability now spreads across multiple stakeholders, from product managers and ML engineers to legal advisors and executive leadership.

Companies that fail to comply with the EU AI Act face penalties. The most serious violations, such as using prohibited AI practices, can result in fines of up to €35 million or 7% of your company’s global annual turnover, whichever is higher.
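To make the "whichever is higher" rule concrete, here is a minimal arithmetic sketch. The function name is hypothetical, and the calculation covers only the top penalty tier described above; it is an illustration, not legal advice.

```python
FIXED_CAP_EUR = 35_000_000   # fixed ceiling for the most serious violations
TURNOVER_PERCENT = 7         # percentage of global annual turnover


def max_fine_eur(global_annual_turnover_eur: float) -> float:
    """Upper bound of the fine for the most serious tier (hypothetical helper)."""
    turnover_based_cap = global_annual_turnover_eur * TURNOVER_PERCENT / 100
    # "Whichever is higher": take the larger of the two ceilings.
    return max(FIXED_CAP_EUR, turnover_based_cap)


if __name__ == "__main__":
    for turnover in (100_000_000, 500_000_000, 1_000_000_000):
        print(f"turnover {turnover:>13,} EUR -> cap {max_fine_eur(turnover):,.0f} EUR")
```

The two ceilings meet at a turnover of €500 million. Below that, the fixed €35 million ceiling applies; above it, the percentage-based ceiling takes over.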

That’s why it’s important to know where your software fits and which rules apply to it. Not every AI system falls under the same rules: the Act defines different categories based on how much risk a system poses.

Types of Risks According to the EU AI Act

The EU AI Act sorts AI systems into four risk categories: those with unacceptable risk, high-risk systems, AI software with limited risk, and minimal risk systems. Each category comes with a different level of legal requirements, and for some, a hard stop.

Let’s take a closer look at each category.

Unacceptable-risk AI systems

Article 5 of the EU AI Act lists and explains what AI systems are banned entirely. The EU considers them too dangerous due to their potential for serious harm.

This includes AI systems that:

- Manipulate behaviour using subliminal, deceptive, or manipulative techniques that impair decision-making and cause significant harm.

- Exploit vulnerabilities (e.g., age, disability, social/economic status) to distort behaviour and cause harm.

- Perform social scoring, evaluating people based on behaviour or personality traits, leading to unjustified or harmful treatment.

- Predict criminality solely through profiling or personality analysis.

- Build or expand facial recognition databases through untargeted scraping of facial images from the internet or CCTV footage.

- Infer emotions in workplaces or educational settings, unless for medical or safety purposes.

- Categorize people biometrically to infer sensitive attributes like race, political views, or sexual orientation.

- Use real-time remote biometric identification (RBI) in public spaces for law enforcement unless under very strict conditions.

If a product uses any of these, it cannot be placed on the EU market.

High-risk AI systems

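This category includes AI used in sensitive areas like recruitment, education, healthcare, and law enforcement. Articles 6-15 of the Act set out how these systems are classified and what requirements they must meet, and Annex III lists the covered use cases.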

If your product uses AI in one of these sensitive areas, the law requires more than just safe design; it expects a full compliance process before and after the system goes live. Here’s what that means in practice:

- Set up a risk management system. You need to assess, document, and manage potential risks at every stage of your system’s lifecycle, from design and testing to deployment and monitoring. (Article 9)

- Apply solid data governance. Your system must rely on high-quality, relevant, and representative data. You also need to detect and reduce bias, especially when data influences decisions about people. (Article 10)

- Prepare detailed technical documentation. Documentation should clearly describe how the system works, what it does, and how it meets the legal requirements. This must be ready before the product reaches the market. (Article 11)

- Keep records of system activity. The system must automatically log events to support traceability and accountability. This helps with investigations, audits, and performance reviews. (Article 12)

- Provide transparency to deployers. You must give those who use or integrate the AI system clear instructions for use, including its capabilities and limitations, so they understand how to use it properly. (Article 13)

- Enable human oversight. People must be able to detect when the AI behaves unexpectedly and have the power to intervene, shut it down, or override its decisions if needed. (Article 14)

- Ensure accuracy, robustness, and cybersecurity. The system must be reliable under normal and adverse conditions. It must also be secure against manipulation, tampering, or unauthorized access. (Article 15)
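As an illustration of how the record-keeping and human-oversight requirements above might look in code, here is a minimal sketch built on Python's standard `logging` and `json` modules. The logger name, the field names, and the 0.80 review threshold are all assumptions made for the example; the Act does not prescribe a log format or a specific threshold.

```python
import json
import logging
from datetime import datetime, timezone

# Hypothetical logger name; in production you would attach a file or
# remote handler so events are actually persisted (not shown here).
audit_logger = logging.getLogger("ai_audit")
audit_logger.setLevel(logging.INFO)

REVIEW_THRESHOLD = 0.80  # assumed cut-off below which a human must review


def record_decision(model_version: str, request_id: str, score: float) -> dict:
    """Log one model decision and flag low-confidence cases for human review."""
    event = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "request_id": request_id,
        "score": score,
        "needs_human_review": score < REVIEW_THRESHOLD,
    }
    # One JSON object per line keeps the log easy to search during audits.
    audit_logger.info(json.dumps(event))
    return event


if __name__ == "__main__":
    print(record_decision("screening-model-1.2", "req-001", 0.64))
```

Logging the model version next to each decision is a deliberate choice: it lets an auditor tie any individual outcome back to the exact system that produced it.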

Before you place a high-risk AI system on the EU market, you must complete a conformity assessment. This is a pre-launch check that proves your system meets the Act’s requirements.

If you fail to comply, you risk legal exposure, a delayed go-to-market timeline, loss of user trust, and penalties. If your product falls into this category, it’s important to align your development process with these expectations early on.

Limited-risk AI systems

AI systems in this category involve some interaction with users but don’t fall under high-risk or prohibited use. A common example is an AI chatbot or virtual assistant that simulates human conversation. While these systems aren’t heavily regulated, they still come with a few obligations.

The most important one is disclosure. If your AI interacts with people in a way that could be mistaken for a human, you must clearly inform users that they are dealing with a machine. This applies to chatbots, virtual assistants, and similar systems that interact directly with people.
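In a chatbot, the disclosure duty can be as simple as a notice on the first turn of a conversation. The sketch below shows one possible way to do it; the notice wording and the function name are assumptions for illustration, not text required by the Act.

```python
# Assumed wording; adapt it to your product and have legal review it.
AI_DISCLOSURE = "You are chatting with an AI assistant, not a human."


def with_disclosure(reply: str, is_first_turn: bool) -> str:
    """Prepend the AI notice to the first reply of a conversation."""
    if is_first_turn:
        return f"{AI_DISCLOSURE}\n\n{reply}"
    return reply


if __name__ == "__main__":
    print(with_disclosure("Hi! How can I help you today?", is_first_turn=True))
    print(with_disclosure("Your order ships tomorrow.", is_first_turn=False))
```

Keeping the notice in one place in the code, rather than leaving it to the model prompt, makes it easier to show that every conversation actually starts with it.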

The Act also encourages companies to follow voluntary codes of conduct to promote transparency, fairness, and accountability. While not mandatory, these practices can help build user trust and reduce the risk of future regulatory issues.

For companies that work with customer-facing AI, it’s a good idea to document how your system works, which data it relies on, and how you handle user consent, even if you’re not legally required to do so yet.

Minimal- or no-risk AI systems

This category covers the lowest-risk AI tools, which typically support convenience or automation and don’t affect people’s rights or safety. Examples include spam filters, AI used in video games, grammar correction tools, and route optimization in logistics. These systems don’t require special approvals or documentation under the EU AI Act.

For most businesses, these tools fall outside the scope of the law’s main obligations. However, the Act still encourages developers to follow responsible design principles, such as:

- Keeping the system explainable

- Maintaining high data quality

- Building safeguards against misuse

These practices matter most when developers plan to scale or repurpose such tools for new use cases.

Even if your AI system is currently considered low risk, it’s worth reviewing where and how it fits in your broader product strategy. Future updates or integrations may trigger higher compliance requirements down the line.

These requirements might look a bit overwhelming, but fret not. In the next section, I will guide you through the key steps to prepare your AI system for EU regulations.

EU AI Act Compliance Checklist: How to Prepare

If you're working with complex AI systems or handling personal data, the EU AI Act puts a lot of work on your shoulders. That’s true. At the same time, a structured approach helps you manage the process and reduces the risk of mistakes that could delay your launch or trigger penalties.

This checklist will help your company prepare. You can find more detailed guidance on the European Commission and European Parliament websites.

Assess your AI system's risk level

First things first, you must identify which risk category applies to your product. If you work in high-impact areas like healthcare, finance, or public safety, expect stricter rules and additional approvals.
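A first-pass triage along the four risk categories can be sketched as a simple rule chain. The tag names and the two lookup sets below are hypothetical and cover only a few of the examples mentioned in this article; a real classification needs a legal review against Article 5 and Annex III.

```python
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical tags for illustration; not an exhaustive legal list.
PROHIBITED_PRACTICES = {"social_scoring", "subliminal_manipulation", "workplace_emotion_inference"}
HIGH_RISK_AREAS = {"recruitment", "education", "healthcare", "law_enforcement"}


def triage(practices: set[str], area: str, interacts_with_people: bool) -> RiskLevel:
    """Return a rough risk level, checking the strictest category first."""
    if practices & PROHIBITED_PRACTICES:
        return RiskLevel.UNACCEPTABLE
    if area in HIGH_RISK_AREAS:
        return RiskLevel.HIGH
    if interacts_with_people:
        return RiskLevel.LIMITED
    return RiskLevel.MINIMAL


if __name__ == "__main__":
    print(triage(set(), "recruitment", True))       # CV screening tool
    print(triage(set(), "customer_support", True))  # support chatbot
    print(triage(set(), "logistics", False))        # route optimizer
```

The checks run from strictest to most lenient, so a system that matches several categories always lands in the most demanding one.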

Document your system clearly

Keep detailed, up-to-date records of datasets, algorithms, model decisions, and system logic. Clear documentation helps with audits, builds customer trust, and keeps you prepared for future changes. For example, it comes in handy if your system later moves into a higher-risk category.

Check your data for bias and quality

Assess your training data to detect bias or patterns that could lead to unfair outcomes. Set up regular reviews and put tools in place to spot and fix issues early.
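One simple starting point for such a review is to compare how often each group receives a positive outcome. The sketch below assumes the data is available as (group, outcome) pairs with outcomes of 0 or 1; the function names are hypothetical, and what counts as an acceptable ratio is a judgment your team has to make, since the Act does not define a numeric threshold.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of positive outcomes per group from (group, outcome) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += int(outcome)
    return {group: positives[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


if __name__ == "__main__":
    sample = [("a", 1), ("a", 1), ("a", 0), ("a", 0),
              ("b", 1), ("b", 0), ("b", 0), ("b", 0)]
    rates = selection_rates(sample)
    print(rates, disparity_ratio(rates))
```

A ratio far below 1.0 does not prove unfairness on its own, but it is a useful signal that the data or the model deserves a closer look.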

Secure your system

Build strong safeguards against tampering, cyberattacks, and data leaks. Security isn't just a legal requirement; it’s essential for maintaining customer trust.

Plan for conformity assessment

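If your product is high-risk, you’ll need to complete a conformity assessment before it goes to market. Depending on the type of system, this is either an internal assessment or a formal review by a notified body. Find out which route applies to you, look into recognized conformity assessment bodies if needed, and assign internal resources to support the process.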

Train your team and update internal processes

Everyone involved in AI development, deployment, or sales should understand the basics of the Act. Offer targeted training and update your internal policies to reflect new compliance workflows.

Appoint an AI Officer

A helpful practice is to designate a person responsible for coordinating your compliance strategy. This role should act as a bridge between your technical team, leadership, regulators, and certifiers. The AI Officer should oversee documentation, risk evaluations, and long-term compliance planning.

In smaller teams, though, it doesn’t have to be a separate full-time position: It can be an additional responsibility for someone already involved in product or legal decision-making.

Following these steps puts your company on solid ground, not just for legal compliance, but for building AI products that are trusted, responsible, and ready for the EU market.

How Uptech Can Help You with EU AI Act Readiness Assessment

As mentioned, the EU AI Act introduces new rules for companies that build or use AI systems. To meet these requirements, you need to assess your product, identify risks, and make sure the right processes are in place. This is where a proper readiness assessment becomes essential.

At Uptech, we know what it takes to develop AI solutions under strict legal and technical conditions. We’ve worked with companies in highly regulated industries like healthcare and finance. While the AI Act is new, many of its core principles already shaped the products we've helped build.

What our readiness assessment includes

- Risk classification. We help define which risk level applies to your AI system and explain what obligations that brings.

- System evaluation. We review your AI models, data flows, and decision-making logic through the lens of legal requirements.

- Compliance gap check. We can highlight missing documentation, weak points in your process, or areas that may trigger non-compliance.

- Governance setup. We help you establish human oversight procedures and clear user disclosures where needed.

Equipped with this, you have a clear understanding of your current state, a list of action items to address compliance gaps, and a path towards full alignment with the EU AI Act.

What Uptech has already done in regulated environments

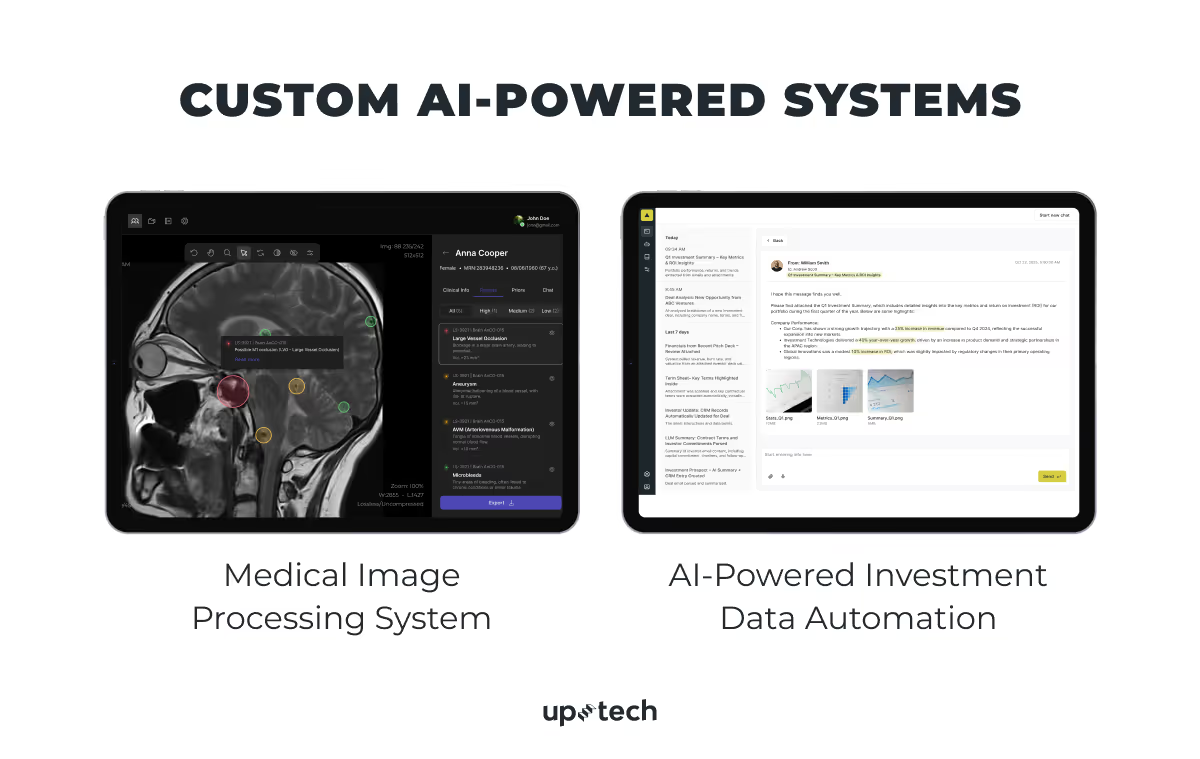

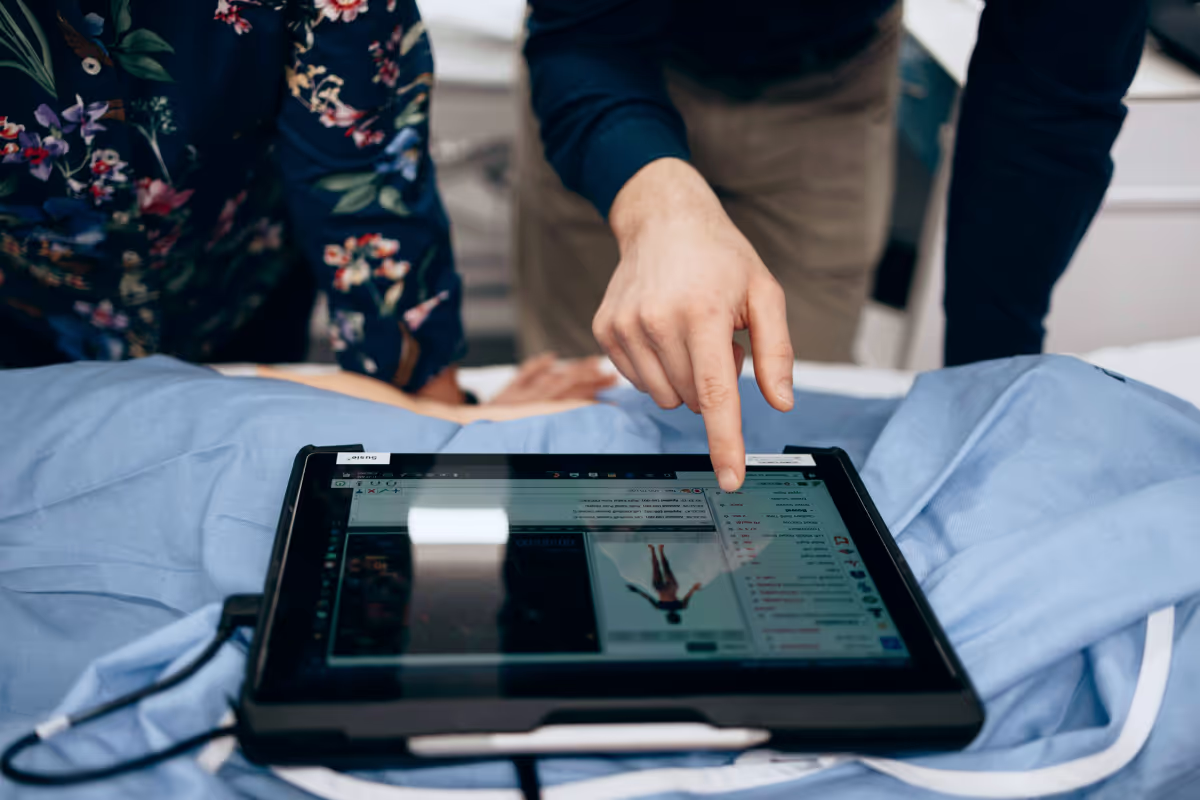

We built an AI-based system for medical image processing that reduces diagnostic time by up to 30%. It uses models like U-Net, DeepLab, and EfficientNet to automate image classification, segmentation, and anonymization. The system meets HIPAA and GDPR requirements and supports both 2D and 3D scans.

Our team also helped an investment firm automate financial data handling with AI. Our solution extracts and structures data from investment documents and uploads it directly into their CRM. The product reduces manual work, improves accuracy, and fits within strict internal compliance standards.

These two cases are just a sample; you can find many more on our work page.

We don’t just write code. We build products that meet legal and technical standards from day one. If you’re still not sure you’re compliant, we can help you figure out where you stand and what to do next.
