Do you remember when most large language models (LLMs) used to say, “My knowledge cutoff is 2021”? That’s no longer an issue: thanks to a newer AI architecture called retrieval augmented generation, or RAG.

Many businesses today are asking whether they can build generative AI-powered tools, like chatbots, that have access to their internal data and documentation. That’s exactly where RAG comes in. Instead of relying solely on the static, general knowledge LLMs are trained on, RAG lets you augment those models with the specific information your business needs.

I’m Oleh Komenchuk, ML Department Lead at Uptech. In this blog, I’ll walk you through what RAG is, how it influences business decision-making, where it’s already making an impact across industries, and most importantly, how to get started.

What is RAG in AI?

Retrieval Augmented Generation, or RAG, is an AI architecture designed to improve the performance of large language models as it helps connect them to external sources of information. Instead of generating answers solely from their fixed training data, RAG systems can look up relevant content in real time, whether it’s a knowledge base, document repository, or internal company database.

This helps solve a key limitation of LLMs: their knowledge becomes outdated the moment training ends. For instance, a model trained on data up to November 2023 might launch in mid-2024, without any knowledge of recent changes, regulations, or product updates.

Main types of RAG systems

There are several types of RAG implementations, depending on the level of complexity and business needs. While the categorization goes as far as 20+ types, we’ll highlight only a few key ones:

- Naive RAG. This AI RAG model has a simple setup where the system retrieves top-matching documents and sends them straight to the LLM without much processing.

- Advanced RAG. As the name suggests, this type uses more robust ranking, chunking, filtering, and re-ranking strategies to improve the quality of retrieved content.

- Hybrid RAG. This is a RAG workflow that combines different retrieval methods (e.g., keyword-based and vector-based search) for better accuracy.

- Agent-based RAG. This RAG system involves AI agents that perform multi-step reasoning and dynamically retrieve and use new information throughout a task.

Agent-based RAG is getting a lot of traction right now, and for good reason. It’s one of the biggest AI trends today, and we think it’s worth taking a closer look at how it works and where it fits in. Unlike classic RAG techniques, where the system straightforwardly retrieves information, agent-based RAG adds an autonomous layer that manages the entire process.

Think of it as a smart decision-maker inside the system.

Let’s say you connect your RAG setup to three sources: emails, PDF documents, and Google Calendar. A user asks, “When is my meeting with the client?” The agent doesn’t just pass this to the model — it first figures out which data source to use. In this case, it identifies that the answer likely sits in Google Calendar, calls the right tool, and returns the result.

This setup makes RAG systems more flexible, allowing them to complete complex tasks by deciding how to approach each query, what tools to activate, and what data to pull, step by step.

How RAG works (with the RAG diagram)

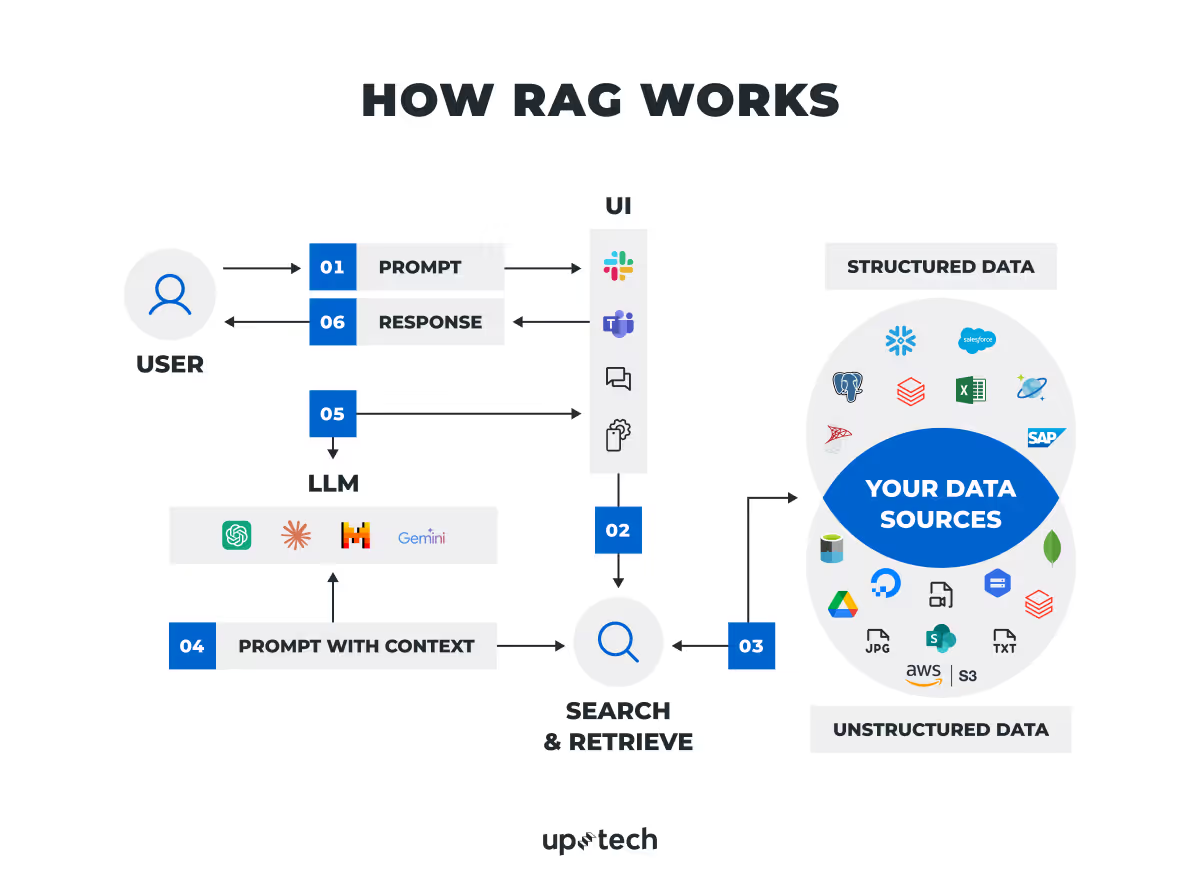

Retrieval augmented generation follows a clear process that connects your data with an AI model to deliver relevant, up-to-date answers. Here’s how it works in practice (look at the RAG diagram below):

- User prompt. A user enters a question or request through a familiar interface, like Slack, Microsoft Teams, or a company’s internal tool.

- Context retrieval. The system takes that prompt and searches across connected data sources, both structured (like Salesforce, SAP, or SQL databases) and unstructured (PDFs, DOCs, Google Drive, SharePoint, and more). It pulls only the most relevant documents or pieces of information.

- Prompt with context. The original query and the retrieved data are bundled together to create a single, enriched input for the language model. This gives the AI everything it needs to generate a response grounded in the latest, most accurate information available.

- LLM generation. A large language model (e.g., ChatGPT or Gemini) processes this enriched prompt and generates a response that’s both fluent and context-specific.

- Response delivery. The final response is sent back to the user in the same interface they started from, Slack, Teams, chat widget, or wherever the conversation is happening.

The RAG workflow helps businesses build AI systems, like AI chatbots or virtual assistants, that can actually use their internal knowledge, not just guess based on outdated training data. It’s fast, scalable, and designed to make AI useful in real-world environments.

Speaking of which, the next section will show you the versatility of the RAG approach and real-life examples.

RAG Use Cases Across Industries

I know from my own experience as a machine learning engineer that during the development of LLM apps for business use, we can’t just rely on default model behavior. In many cases, RAG is a must-have. RAG systems are already proving useful in healthcare, fintech, and automation-heavy industries like e-commerce.

Below are real examples of how companies integrate RAG into their operations to work with internal data and reduce hallucinations in AI outputs.

Healthcare: From patient support to clinical decision-making

RAG gives healthcare providers the ability to build tools, like internal virtual healthcare assistants, that pull reliable, up-to-date answers directly from treatment protocols, research papers, or clinical documentation, among other things.

A private clinic or hospital, for example, may want a support chatbot that answers patient questions based on real medical documents, not generic internet sources. Without the RAG technique, even the best LLM would struggle to answer correctly or provide references. With RAG, on the other hand, the system retrieves relevant documents and ensures that responses reflect accurate, up-to-date information.

Internally, RAG can also be used to:

- Pull data from electronic health records (EHRs) and confidential sources that LLMs were not trained on

- Extract and synthesize key points from large volumes of clinical documentation

- Support clinicians in diagnostics and treatment planning based on guidelines and patient-specific data

One example is Apollo 24|7, a healthcare platform that integrated Google’s MedPaLM with RAG to build a Clinical Intelligence Engine (CIE). The system gives clinicians access to de-identified patient data, recent studies, and treatment guidelines. All this information is retrieved and summarized in real time to support diagnostic decisions.

Fintech: Virtual agents and internal knowledge access

In fintech, RAG can be used to create virtual assistants that connect directly to internal databases, financial documents, and policy archives.

One great example is the Royal Bank of Canada (RBC). They had a problem: internal policies were scattered across PDFs, spreadsheets, and web platforms. Finding the right information took too long and often led to mistakes.

To solve this, they built Arcane, a RAG-based chatbot. A specialist types a question into the chat. Arcane searches the internal systems, pulls up the right answer, and adds links to the original documents.

The hardest part was the messy data. Information is stored in different formats and locations. RAG helped the team layer a search system on top of it, making everything easier to access.

Automation: Analytical tasks and report summaries

There are many RAG applications in process automation, e.g., internal analytics and reporting workflows. For instance, Grab, a multi-service platform in Asia, built a RAG-based system that connects LLMs with internal APIs and prompt templates to generate summaries and reports automatically. These reports are delivered directly in Slack, and Grab claims this saves around 3-4 hours of manual work per report.

From my perspective, this kind of setup makes a lot of sense for companies that deal with repetitive data tasks, e.g., weekly reports, KPI tracking, or fraud investigations. Instead of assigning these tasks to analysts each time, a RAG system can pull the relevant data, summarize it, and send it to the right team or person.

I believe RAG is especially useful when:

- You already have structured data (SQL databases, dashboards, APIs)

- The same types of reports are generated regularly

- Teams need fast access to insights without depending on engineers or analysts

RAG automation helps reduce bottlenecks, improves knowledge access across teams, and frees up specialists to work on higher-impact tasks.

Key Benefits of RAG Models for Business

The RAG systems change the game for how companies use AI to make decisions. The difference compared to classic models is simple: instead of relying only on outdated, hardcoded knowledge, RAG brings in current, relevant data on demand. And this shift solves several real problems.

- Up-to-date, domain-specific answers. RAG lets you feed fresh, relevant information into the model. You can keep the system aligned with current data, without retraining anything.

- Lower hallucination risk. Classic models rely on static training sets. RAG pulls from sources you control, so the output is less likely to include made-up facts. It can even add source links to show where the answer came from.

- More relevant, personalized responses. RAG allows the model to access user-specific or case-specific information, which helps tailor answers to the exact request.

- Lower cost. You skip the high compute costs of fine-tuning. You just connect the data you already have.

- More trust in AI. When users see that the answers match internal documents and include citations, they’re more likely to trust what they see.

In other words, in 2023, building something like a chatbot that answered based on internal documents took serious time, money, and resources. A client would come in with a specific use case, and the only way to adapt an open-source model was to fine-tune it on their data.

That meant extra infrastructure, long dev cycles, and high costs.

Now, RAG makes this process easier. You don’t need to fine-tune a model anymore. Instead, you connect the LLM to your own data sources, PDFs, Word docs, spreadsheets, databases, or even links to websites.

The model doesn’t have to learn the data. It just pulls what it needs at the moment of the request. This approach saves time and makes AI tools easier to adapt to different use cases.

RAG and Data Security: What Businesses Need to Know

One of the key benefits of RAG is its ability to access external data sources. But with that comes a serious consideration: security. Unlike traditional LLMs that only rely on pre-trained knowledge, RAG systems work directly with company-specific documents, databases, and files. This can include confidential or regulated information, especially in industries like healthcare or finance, with strict compliance requirements like HIPAA, GDPR, and many more.

To use RAG safely, businesses need to understand how and where the model runs. There are two main approaches:

1. Using API-based RAG models (OpenAI, Claude, Gemini)

This is the most accessible option. You use a hosted model via API, and all operations happen on the provider’s infrastructure. The upside is quick setup and zero infrastructure costs on your end.

The downside: you don’t control where the data goes. Providers like OpenAI say they don’t store or use data from API requests, but there’s no absolute guarantee. This makes API-based models a risk when you operate sensitive or regulated data.

2. Hosting the model in your own environment

If data privacy is critical, it’s better to keep everything under your control. This approach comes in two variations:

2.1 Hosting open-source models in a private cloud

You can use models like LLaMA or Mistral and deploy them in your own secure environment, for example, on Azure or AWS, or another private cloud. Your data stays within your infrastructure, and no third party touches it.

The tradeoff: cost. Running large models on GPU instances is expensive (starting around $1.50/hour), and maintaining the infrastructure requires extra effort.

2.2 Using Azure OpenAI Services

This is a middle ground. You still use OpenAI models, but they run inside Microsoft Azure, isolated from OpenAI’s infrastructure. It’s a pay-as-you-go model; you only pay for the usage of your private endpoint. According to Microsoft’s official documentation, data stays within the Azure environment and does not reach OpenAI.

So, what’s the best choice?

- For non-sensitive use cases, API-based RAG setups are fast and cost-effective.

- For anything involving private documents, personal data, or compliance, you should host the model yourself or use a cloud provider with strict data boundaries (like Azure OpenAI).

When RAG is set up with the right security model, it becomes a powerful and safe way to use your company’s data in AI applications.

How to Get Started with RAG?

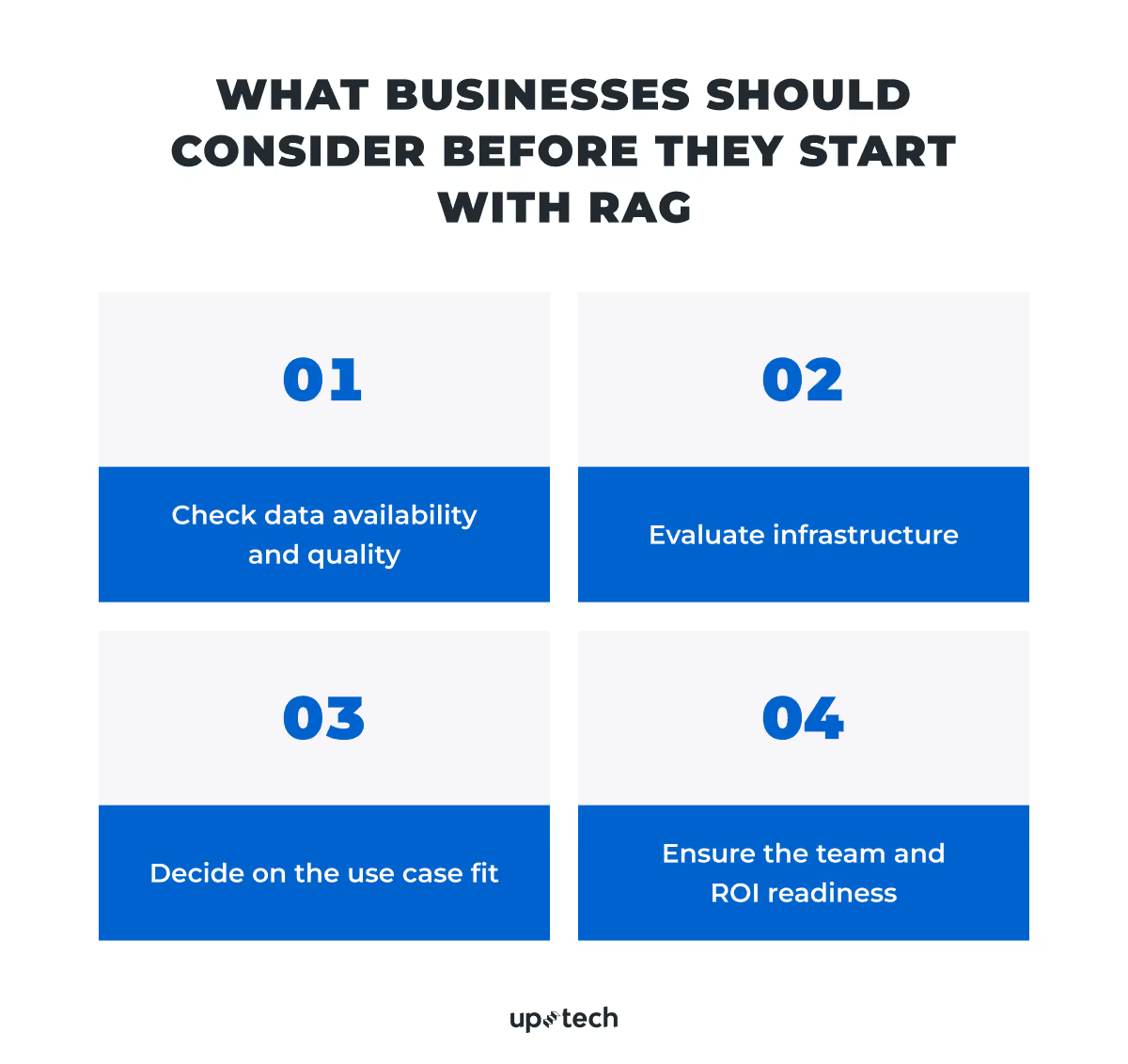

RAG is already a must-have for many businesses that work with generative AI. But before you jump in, it’s important to check whether your company is actually ready to implement it, and if it makes sense for your specific case. Here are a few recommendations.

Check data availability and quality

Make sure you have access to relevant, clean, and up-to-date information. Broken links, outdated content, or missing data will affect the output quality. RAG doesn’t fix bad data, it just exposes it faster.

Evaluate infrastructure

Your data sources need to be hosted somewhere that the RAG system can reach. Whether it’s a database, internal wiki, or cloud storage, your setup must be technically stable, secure, and scalable.

Decide on the use case fit

RAG is powerful, but it’s not always the right tool. If you only need to pull from a single, fast-changing data source, you might not need full RAG at all. In some cases, a regular LLM with a dynamic prompt will do the job just fine. Especially now, with models like GPT-4.1 offering large context windows (up to 1 million tokens), basic use cases can be covered without extra layers.

The RAG models, on the other hand, are better for static information that doesn’t change daily.

Ensure the team and ROI readiness

Make sure your team is ready to manage a GenAI product across engineering, data, and security. And be clear on the expected return. RAG systems aren’t magic; they work when the business problem and the data setup are a match.

RAG will keep evolving, and so will the models themselves. But today, if your business deals with a lot of internal knowledge and needs AI to work with it safely and reliably, RAG is no longer optional. It’s the foundation.

At Uptech, we don’t push AI for the sake of the trend. We evaluate your data, infrastructure, and goals, and only suggest RAG or other solutions when they truly make sense. You get clear recommendations backed by real expertise, not hype.

.avif)