Have you ever asked an LLM-powered chatbot like ChatGPT a question and received an answer that was too generic? That's the best case. At worst, the answer contained incorrect information and misled you. Despite their power, large language models (LLMs) can still provide outdated information or even make it up. From a business point of view, that's not something you can rely on in everyday operations.

One of the most effective ways to improve LLMs is Retrieval-Augmented Generation (RAG). With this approach, LLM outputs are grounded in trusted data sources such as company policies or internal documents. This opens up a whole new range of RAG use cases and business benefits.

I am Oleh Komenchuk, ML Department Lead at Uptech. I've already covered RAG in one of our previous blog posts. This article focuses on the benefits RAG brings to businesses and its most promising use cases.

Stay tuned!

What is RAG, and Why Might You Need It?

Retrieval-augmented generation (RAG) is an approach in generative AI development that combines the language understanding capabilities of large language models (LLMs) with real-time retrieval of external data. Because RAG adds context and relevance, it helps AI make better sense of information and provide more accurate, up-to-date, and context-aware answers.

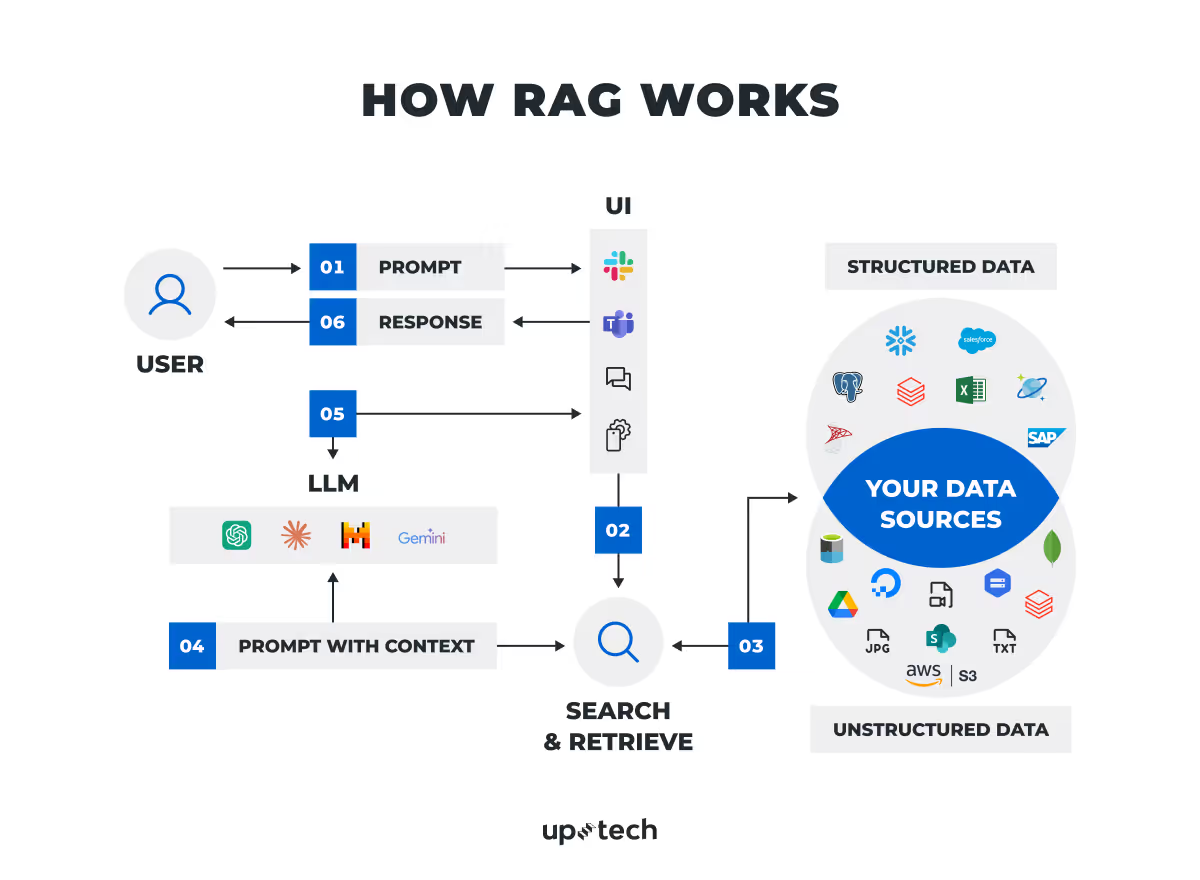

So, how does RAG work?

We have a whole blog post dedicated to retrieval-augmented generation, so make sure to check it out if you want to learn more. Here, I will explain things in a nutshell.

RAG connects LLMs to external knowledge bases and data sources. These can hold both structured data (e.g., databases, spreadsheets, CRM systems) and unstructured data (e.g., Word docs, emails, or web content). When a user asks a question, RAG identifies useful and relevant information in these sources, combines it with the question, and passes both to the language model.
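To make this flow concrete, here is a minimal sketch of the retrieve-then-prompt loop in Python. It is illustrative only: a bag-of-words cosine similarity stands in for the embedding model and vector database a production system would use, the document IDs and texts are invented, and the final LLM call is left out, so the code stops at the prompt that would be sent to the model.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return the k documents most similar to the question as (doc_id, text) pairs."""
    q = Counter(tokenize(question))
    ranked = sorted(docs.items(), key=lambda item: cosine(q, Counter(tokenize(item[1]))), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Combine the retrieved passages with the user's question into one prompt for the LLM."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the context below. If the answer is not there, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical knowledge base mixing a policy, a CRM note, and an FAQ entry.
docs = {
    "policy-12": "Employees may carry over up to five unused vacation days into the next year.",
    "crm-note-7": "Customer Acme renewed its annual contract in March.",
    "faq-3": "Expense reports must be submitted within 30 days of purchase.",
}
question = "How many vacation days can I carry over?"
prompt = build_prompt(question, retrieve(question, docs, k=1))
print(prompt)
```

Replacing `retrieve` with a vector-database query and sending `prompt` to a model API gives the basic shape of most RAG systems. The instruction to answer only from the supplied context is what keeps the model tied to the retrieved sources.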

Not only does RAG help language models give more accurate and reliable answers, but it also reduces the risk of AI “hallucinations.” When a system can pull information from trusted sources like encyclopedias and databases, it can ground its answers in verifiable facts. This makes the model far less likely to invent details or present false statements with confidence.

In research-heavy fields, a single incorrect claim can waste time or even create financial or safety risks. With RAG, teams can be more confident that the output reflects up-to-date, factual knowledge rather than guesses from a static model.

The Benefits of RAG for Businesses

RAG offers more than accurate answers and fewer hallucinations. It gives companies stronger data protection, real-time access to knowledge, and more efficient use of resources.

According to Grand View Research, the global RAG market was valued at $1.2 billion in 2024 and is forecast to reach $11 billion by 2030, growing at a compound annual growth rate (CAGR) of 49.1% between 2025 and 2030. USD Analytics offers an even larger outlook, projecting growth from $1.6 billion in 2025 to $32.6 billion in 2034.
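These projections follow the standard compound annual growth rate formula, CAGR = (end value / start value)^(1 / years) - 1. The short Python helper below applies it to the USD Analytics projection, which is quoted here without a growth rate; the resulting percentage is our own arithmetic, not a figure published by either research firm.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# USD Analytics projection quoted above: $1.6B (2025) -> $32.6B (2034), i.e. 9 years of growth.
rate = cagr(1.6, 32.6, 2034 - 2025)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 39.8%
```

Note that Grand View Research's 49.1% is stated for the period between 2025 and 2030, so it should be checked against a 2025 starting value rather than the 2024 figure.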

This rapid expansion reflects the enterprise demand for domain-specific AI that is context-aware and reliable. Retail and e-commerce companies use RAG for personalization and better customer engagement. Healthcare and finance apply it to support precise decision-making in sensitive areas. Media, IT, and education also integrate RAG to improve content production, automate support, and enrich learning.

Across Asia Pacific, Europe, and the Americas, emerging markets show some of the strongest growth potential, which makes RAG a strategic business move in addition to a technology choice.

Strong security

For regulated industries, RAG can be the factor that makes AI safe enough to adopt at scale. Unlike training or fine-tuning an LLM on proprietary data, RAG does not embed confidential material directly into the model. Instead, it retrieves knowledge from secure repositories at query time, which helps protect intellectual property and reduces the risk of leaks.

Real-time relevance

LLMs trained on static data quickly become outdated, which is why a model may respond with “my knowledge cutoff is 2023.” RAG, on the other hand, keeps outputs tied to the latest information. For businesses, this means they can respond to regulatory changes, market updates, or scientific discoveries without retraining their models again and again. This brings us to the next major advantage: cost efficiency.

Cost efficiency

As mentioned above, retraining or fine-tuning large models on new data is both expensive and time-consuming. RAG avoids this process because it incorporates fresh information at query time. As a result, companies can update their systems without repeated training cycles, which reduces costs and shortens development timelines.
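The cost argument rests on one mechanical difference: in a RAG system, new knowledge goes into the retrieval index, not into the model. The toy Python class below shows that overwriting a document changes what the very next query retrieves, with no training step involved. `KnowledgeIndex` and its methods are hypothetical names, and simple term overlap stands in for real vector search.

```python
import re
from dataclasses import dataclass, field
from typing import Optional


def tokens(text: str) -> set[str]:
    """Lowercase word set used for naive term-overlap scoring."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class KnowledgeIndex:
    """Hypothetical in-memory index: knowledge lives here, not in model weights."""

    docs: dict[str, str] = field(default_factory=dict)

    def add_document(self, doc_id: str, text: str) -> None:
        # Adding or overwriting an entry takes effect on the next query; nothing is retrained.
        self.docs[doc_id] = text

    def search(self, query: str) -> Optional[str]:
        """Return the ID of the document sharing the most terms with the query, if any."""
        q = tokens(query)
        best_id, best_score = None, 0
        for doc_id, text in self.docs.items():
            score = len(q & tokens(text))
            if score > best_score:
                best_id, best_score = doc_id, score
        return best_id


index = KnowledgeIndex()
index.add_document("quarterly-reporting", "Quarterly reports are due 45 days after quarter end.")
# A rule changes: update the stored document instead of retraining a model.
index.add_document("quarterly-reporting", "Quarterly reports are now due 30 days after quarter end.")

hit = index.search("When are quarterly reports due?")
print(index.docs[hit])  # Quarterly reports are now due 30 days after quarter end.
```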

Domain-specific precision

With RAG, AI models can integrate with specialized knowledge bases. Healthcare providers can draw on medical research, financial institutions on compliance documents, and legal firms on case law. The resulting answers are tailored to each industry's standards, which makes them more precise and reliable.

Scalability with complex data

Many enterprises manage vast collections of structured and unstructured data. RAG can connect across multiple repositories, process diverse formats, and still provide coherent answers. While integration adds some complexity, the system remains scalable enough to handle growing volumes of data over time.

Trust and control

RAG can provide source attribution by pointing to the documents behind its answers. This traceability increases user confidence, allows teams to validate outputs, and meets audit requirements. In industries such as finance, healthcare, and law, that level of accountability is not optional but a necessity.
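One simple way to make attribution auditable is to have the model tag each claim with the ID of the passage it used, then verify those tags against what was actually retrieved. The sketch below assumes a hypothetical `[doc-id]` citation convention and an invented model answer; it is not tied to any particular RAG framework.

```python
import re

# Matches citation markers such as [policy-4] in the model's answer.
CITATION = re.compile(r"\[([A-Za-z0-9_\-]+)\]")


def check_citations(answer: str, retrieved_ids: set[str]) -> dict[str, list[str]]:
    """Split cited IDs into those backed by retrieved documents and those pointing at nothing retrieved."""
    cited = CITATION.findall(answer)
    return {
        "supported": sorted({c for c in cited if c in retrieved_ids}),
        "unsupported": sorted({c for c in cited if c not in retrieved_ids}),
    }


# Hypothetical model output and retrieval results.
answer = "Wire transfers above $10,000 need a second approval [policy-4]. See also [memo-99]."
report = check_citations(answer, retrieved_ids={"policy-4", "policy-7"})
print(report)  # {'supported': ['policy-4'], 'unsupported': ['memo-99']}
```

Answers with unsupported citations, or with no citations at all, can then be routed to a human reviewer instead of being presented as verified.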

These are only some of the reasons to adopt this technology. But what about real-life RAG applications? Do they actually exist? The answer is yes, and there are plenty. From practical case studies that show how companies already use RAG in their operations to research papers and even community discussions on Reddit, the examples keep growing.

Below, we gathered some of the most interesting RAG examples worth exploring.

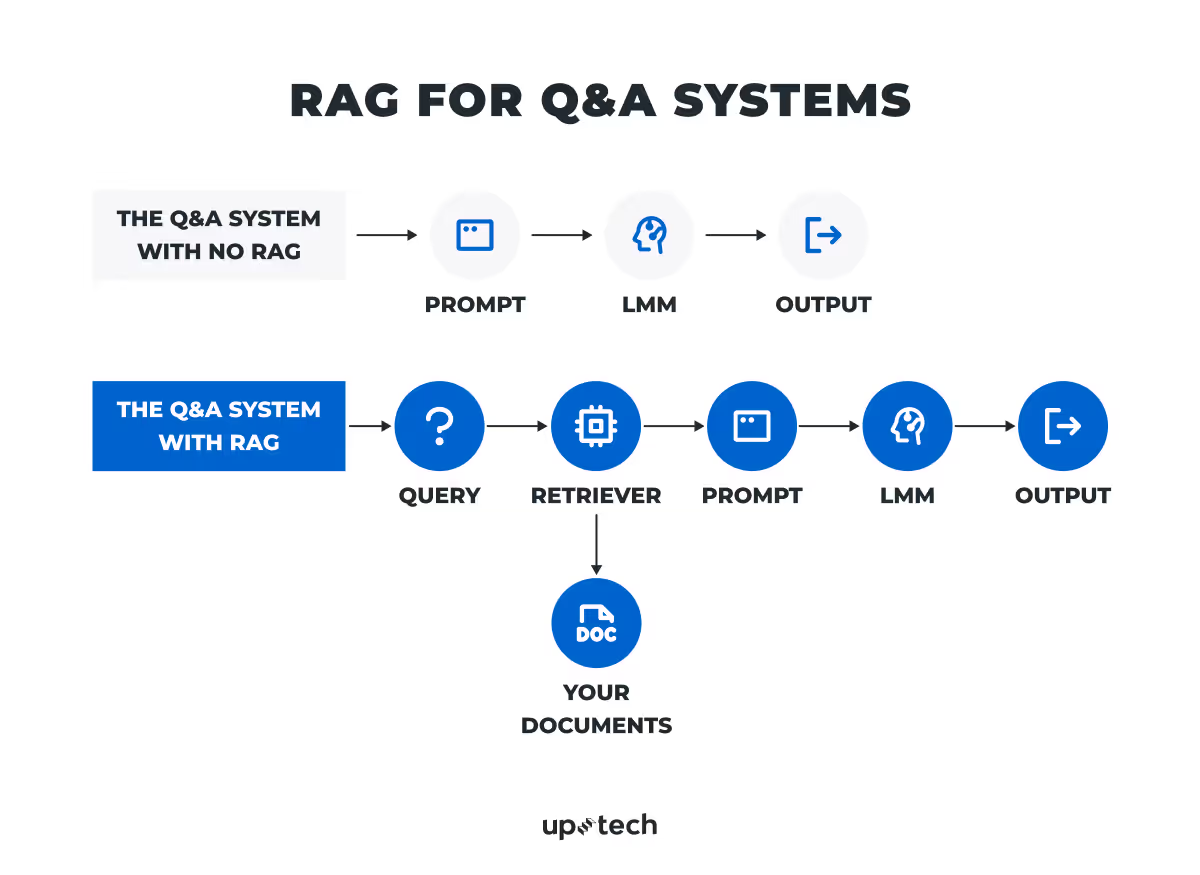

RAG Use Case 1: Advanced Question-Answering Systems

The first RAG use case is a Q&A system. In a question-answering system, the retrieval component identifies the most relevant documents or passages, and the generation component produces clear, detailed answers based on that content.
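Retrieval in Q&A systems usually works on passages rather than whole papers, so long documents are split into chunks before indexing. Below is a minimal sketch of overlapping, word-based chunking; the window sizes are illustrative assumptions, and many production systems count model tokens instead of words.

```python
def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(12))
print(chunk_words(sample, size=5, overlap=2))
# ['w0 w1 w2 w3 w4', 'w3 w4 w5 w6 w7', 'w6 w7 w8 w9 w10', 'w9 w10 w11']
```

Thanks to the overlap, any span of up to `overlap` words that straddles a chunk boundary still appears intact in at least one chunk, at the cost of storing some text twice.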

In research-heavy fields like pharma, this advantage is especially clear. R&D teams need quick access to vast collections of biomedical papers, patents, and conference abstracts. Traditional literature search provides long lists of articles that scientists must read and summarize manually. A RAG system changes the process: instead of sorting through dozens of papers, a researcher can ask, “What do recent studies say about biomarker ABC in lung cancer?” The system retrieves the most relevant literature and generates a synthesized answer, often with citations.

Practical examples already exist. BioRAGent, an interactive biomedical QA system, demonstrates how scientists can pose complex questions and receive answers linked to source snippets from PubMed. Another system, PaperQA, was evaluated on PubMedQA, a benchmark of biomedical questions. GPT-4 alone reached an accuracy of 57.9%. With RAG, PaperQA achieved 86.3%, an improvement of 28.4 percentage points. This gap shows how much retrieval can improve the reliability and usefulness of answers for experts.

RAG Use Case 2: Virtual Assistants

Here's another interesting RAG application: virtual assistants that move beyond scripted replies. Instead of relying on fixed knowledge, the system retrieves current information such as events, news, or weather, and gives natural answers tailored to the user's request. This makes the interaction more precise and more useful.

A discussion on Reddit shows how this might work in practice. A user described an AI agent that responds to questions and executes tasks on the user’s behalf. For example, the user asks: “Create a lunch appointment with Chase for tomorrow.” The RAG-enabled assistant draws on personal context such as favorite restaurants, home address, time zone, and stored contacts. With this data, it creates the calendar invitation with the correct restaurant, location, and Chase’s email address.

Although still experimental, cases like this point to the future of RAG-powered assistants that can combine scattered data and deliver practical support.

RAG Use Case 3: Call Centers and Customer Support

Customer support teams face an enormous flow of questions every day, from simple account checks to complex service issues. Traditional chatbots often rely on pre-defined scripts and struggle with nuanced requests. RAG gives AI chatbots instant access to internal docs, ticket histories, and FAQs. Instead of canned responses, these assistants understand context and deliver relevant help. When a company applies RAG in customer support, the AI first searches through support documentation and then provides an answer that aligns with current company guidelines.
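The “search first, then answer” flow described above also needs a rule for when the search comes back empty. The sketch below is one possible design, not a description of any specific product: if no knowledge-base article matches well enough, the bot escalates to a human instead of improvising. The term-overlap score and the 0.3 threshold are arbitrary stand-ins for a real similarity model and a tuned cut-off.

```python
import re


def terms(text: str) -> set[str]:
    """Lowercase word set for naive matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_score(query: str, text: str) -> float:
    """Share of query terms found in the text (a stand-in for vector similarity)."""
    q = terms(query)
    return len(q & terms(text)) / len(q) if q else 0.0


def route(query: str, kb: dict[str, str], threshold: float = 0.3) -> dict:
    """Answer from the best-matching article, or escalate when nothing matches well enough."""
    if not kb:
        return {"action": "escalate", "score": 0.0}
    best_id = max(kb, key=lambda doc_id: overlap_score(query, kb[doc_id]))
    score = overlap_score(query, kb[best_id])
    if score < threshold:
        # Hand off rather than let the model improvise outside company guidelines.
        return {"action": "escalate", "score": score}
    return {"action": "answer", "source": best_id, "context": kb[best_id], "score": score}


# Hypothetical help-center articles.
kb = {
    "kb-101": "To reset your password, open Settings and choose Reset password.",
    "kb-205": "Refunds are issued to the original payment method within 5 business days.",
}
print(route("How do I reset my password?", kb)["action"])       # answer
print(route("Can I change my shipping address?", kb)["action"])  # escalate
```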

JPMorgan Chase provides a strong example. The bank’s call centers handle millions of interactions each year across services such as fraud and claims, home lending, wealth management, and collections. To help employees manage this load, the company launched EVEE Intelligent Q&A, a GenAI solution built on RAG. EVEE allows specialists to ask questions and instantly receive concise answers drawn from internal documentation. The tool integrates with existing systems and has already improved efficiency, call resolution times, and both employee and customer satisfaction.

Here’s what JPMorgan Chase says about AI and RAG: “Moving into data and AI infrastructure, we highlight trends such as the evolution of Retrieval Augmented Generation (RAG) for integrating proprietary data into model responses and multi-agent systems.”

RAG Use Case 4: Document Summarization

Document summarization condenses long and complex texts into short, easy-to-digest versions. In a RAG setup, the retrieval component selects the most relevant sections of a document, and the generation component produces a summary that captures the key points. This ensures that the output reflects both the original content and the latest context available from external sources.
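The retrieve-then-summarize pattern can be sketched in a few lines. The code below is a hypothetical illustration, not any company's actual system: it scores each paragraph of a made-up earnings-call transcript against a few focus terms, keeps the best ones in their original order, and builds a prompt asking the model to tag every bullet with its source paragraph, which is what makes click-through to the original possible.

```python
import re


def select_sections(paragraphs: list[str], focus: str, max_sections: int = 2) -> list[tuple[int, str]]:
    """Pick the paragraphs sharing the most terms with `focus`, then restore document order."""
    focus_terms = set(re.findall(r"[a-z0-9]+", focus.lower()))
    scored = [
        (len(focus_terms & set(re.findall(r"[a-z0-9]+", p.lower()))), i, p)
        for i, p in enumerate(paragraphs)
    ]
    # Highest score first; ties broken by position so results are deterministic.
    top = sorted(scored, key=lambda s: (-s[0], s[1]))[:max_sections]
    return sorted(((i, p) for _, i, p in top), key=lambda pair: pair[0])


def summary_prompt(selected: list[tuple[int, str]]) -> str:
    """Ask for bullet points that cite the paragraph each one came from."""
    body = "\n".join(f"[para-{i}] {p}" for i, p in selected)
    return (
        "Summarize the key points as bullet points. End each bullet with the "
        "[para-N] tag it came from so readers can open the source.\n\n" + body
    )


# Invented transcript paragraphs for illustration.
paragraphs = [
    "Operator: Welcome to the call.",
    "Revenue grew 12% year over year, driven by subscriptions.",
    "We expect margins to narrow next quarter due to hiring.",
    "Thank you all for joining.",
]
selected = select_sections(paragraphs, "revenue margins guidance")
prompt = summary_prompt(selected)
print(prompt)
```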

Here’s a strong real-life RAG example. Bloomberg applies the RAG approach to financial transcripts and earnings reports. The system uses dense vector databases and RAG to analyze every paragraph, then distills the findings into bullet points that highlight the most critical information. Users can also click through to see the original source, which keeps them in control and removes doubt about accuracy.

For financial analysts, this means faster access to key insights, more confidence in the data, and the ability to focus on why events happen rather than spending hours parsing lengthy documents.

RAG Use Case 5: Medical Diagnostics and Research

Healthcare produces enormous amounts of data. Doctors must balance electronic health records, clinical guidelines, and the latest research when they make decisions. No single person can process it all in real time. RAG can step in as a medical assistant that retrieves the most relevant information and provides doctors with clear, context-based answers.

One Reddit user described building a RAG service to navigate thousands of pages of internal healthcare documents. The tool made information accessible in seconds and proved so valuable that the organization plans to roll it out more broadly. This shows how even small-scale projects can set the stage for larger adoption.

Big players have also explored this space. IBM Watson Health applied retrieval-based techniques similar to RAG in oncology, comparing patient data with a vast body of medical literature. According to a study published in the Journal of Clinical Oncology, IBM Watson for Oncology matched expert oncologists' treatment recommendations 96% of the time. This highlights the potential of retrieval-grounded AI to improve diagnostic accuracy and help doctors design personalized treatments, which can mean better patient care and less time spent digging through records.

RAG Use Case 6: Fraud Detection and Risk Assessment

Phone scams cause banks billions in annual losses, and the rise of realistic voice synthesis makes these attacks even harder to detect. Fraudsters can now mimic employee or customer voices convincingly enough to trick traditional security checks.

RAG applications provide a stronger defense. The University of Waterloo recently published a paper on a RAG-based system designed to address this challenge. Their approach combines real-time transcription of calls, identity validation, and dynamic policy retrieval. The system checks conversations against the most up-to-date internal rules and flags attempts to trick employees or customers into sharing sensitive data.

Unlike traditional models, it adapts to new policies without retraining, which makes it faster and more flexible. The study showed that RAG can catch fraud attempts with accuracy levels close to 98% on test data.

Beyond the lab, Mastercard has already applied RAG-based voice scam detection in production, reaching a 300% increase in detection rates.

RAG Use Case 7: Compliance Check

If you work in a regulated industry such as healthcare or finance, you know that rules can change quickly and often. Staying current is costly, and applying requirements consistently across processes is difficult. Missed updates raise audit risk, while delays increase expenses.

RAG can help you out here, too. There’s no need to chase documents or email the legal team. A RAG-powered assistant retrieves the latest internal policies and external regulations. It then delivers a clear answer with citations. This shortens resolution time and strengthens accountability. With RAG, compliance shifts from a reactive task to a proactive approach.
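Retrieving “the latest internal policies” implies some version control in the knowledge base. One way to handle it, sketched below with a hypothetical `PolicyVersion` record and invented policy texts, is to keep only the newest version of each policy already in force on the relevant date and to attach the policy ID, version, and effective date as a citation.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PolicyVersion:
    policy_id: str
    version: str
    effective: date
    text: str


def latest_versions(versions: list[PolicyVersion], as_of: date) -> dict[str, PolicyVersion]:
    """For each policy, keep the newest version already in effect on `as_of`."""
    current: dict[str, PolicyVersion] = {}
    for v in versions:
        if v.effective > as_of:
            continue  # not in force yet (e.g. a draft or a future rule)
        best = current.get(v.policy_id)
        if best is None or v.effective > best.effective:
            current[v.policy_id] = v
    return current


def cite(v: PolicyVersion) -> str:
    """Render policy text with a citation the assistant can show to the user."""
    return f"{v.text} [{v.policy_id} v{v.version}, effective {v.effective.isoformat()}]"


versions = [
    PolicyVersion("KYC-01", "1", date(2023, 1, 1), "Verify customer ID for accounts over $5,000."),
    PolicyVersion("KYC-01", "2", date(2025, 3, 1), "Verify customer ID for all new accounts."),
    PolicyVersion("KYC-01", "3", date(2030, 1, 1), "Draft: add a biometric check."),
]
current = latest_versions(versions, as_of=date(2025, 6, 1))
print(cite(current["KYC-01"]))
# Verify customer ID for all new accounts. [KYC-01 v2, effective 2025-03-01]
```

Filtering by effective date before generation keeps superseded rules and not-yet-active drafts out of the model's context entirely.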

Ramp, a fintech company, shows how this works in practice. Their team replaced a fragmented, homegrown classification method with a RAG-based assistant that uses the NAICS (North American Industry Classification System) standard.

NAICS provides uniform industry codes. These codes simplify categorization, improve reporting accuracy, and strengthen compliance checks. Ramp relied on authoritative documents to reduce manual reviews, improve efficiency, and create a smoother process for financial reporting.
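Ramp's implementation details aren't covered here, but a common way to apply RAG to classification against a standard like NAICS is to retrieve a short list of candidate codes for a business description, let the LLM choose among them, and reject any answer outside that list. The sketch below assumes that pattern: the three-code excerpt uses paraphrased descriptions, term overlap stands in for semantic search, and the model's choice is simulated.

```python
import re
from typing import Optional

# Illustrative excerpt with paraphrased titles; a real system would index the full NAICS documentation.
NAICS = {
    "722511": "Full-service restaurants: table service, food and drinks ordered and served while seated",
    "541511": "Custom computer programming services: writing, modifying, and testing software for clients",
    "484121": "General freight trucking, long-distance, truckload",
}


def terms(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def candidate_codes(description: str, k: int = 2) -> list[str]:
    """Rank codes by term overlap with a business description and keep the top k."""
    d = terms(description)
    return sorted(NAICS, key=lambda code: len(d & terms(NAICS[code])), reverse=True)[:k]


def accept(model_choice: str, candidates: list[str]) -> Optional[str]:
    """Keep the model's pick only if it was retrieved; None means send to manual review."""
    return model_choice if model_choice in candidates else None


cands = candidate_codes("Family restaurant with table service serving Italian food")
print(cands[0])                  # 722511
print(accept("722511", cands))   # 722511
print(accept("999999", cands))   # None
```

Constraining the model to retrieved candidates means every accepted code is one that actually exists in the authoritative list.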

RAG Use Case 8: Drug Discovery Support

RAG opens new possibilities for drug discovery because it solves one of the field’s biggest hurdles: the need to keep AI models updated with fast-growing biochemical data. Traditional fine-tuning of domain models is expensive. It also loses relevance quickly as new results appear.

A recent study introduced CLADD, a framework that uses RAG to connect large language models with external biochemical databases and knowledge graphs in real time. This setup gives scientists immediate access to the latest research without the need to retrain the models. It allows accurate predictions about drug properties, protein targets, and toxicity.

CLADD coordinates several agents, each responsible for a separate task, and integrates their outputs into a single clear answer. Because the system grounds every step in external knowledge, it avoids the pitfalls of static models and improves transparency. Compared with both general-purpose and domain-tuned LLMs, RAG-powered CLADD showed stronger results across multiple tasks. For pharmaceutical companies, this can mean more accurate decisions, faster discovery cycles, and lower development costs.

RAG Use Case 9: HR and Onboarding Assistants

Starting a new job is often overwhelming. A study by HR Bartender found that 64% of new hires decide whether to stay or leave within the first 45 days. A SHRM study in 2024 found that companies using advanced onboarding solutions helped employees reach full productivity 33% faster than those without structured support.

In many cases, new employees spend their first week buried in PDFs and chasing HR for simple answers about vacation policies or benefits. A RAG assistant can change that. It can chat with employees in plain language and pull answers directly from the most recent HR documents, handbooks, and onboarding materials. No one wastes time digging through files.

Think about how often HR teams answer the same questions: “How do I request time off?” “Where is the expense policy?” “Who handles IT issues?” A RAG system provides instant, verified answers and allows HR to focus on real people matters instead of acting as a search engine.

The biggest impact comes during onboarding. Instead of overwhelming new hires with a flood of information, the RAG assistant guides them step by step. It covers administrative tasks, tool setup, and contacts for each department. New employees become productive faster, and every hire receives the same level of support, whether they start in New York or Tokyo.
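Giving every employee the right answer regardless of office usually means filtering documents by metadata before retrieval, so a Tokyo hire never gets the New York leave policy. The snippet below sketches that filter with a hypothetical `HRDoc` record and invented documents; the `"ALL"` marker for company-wide material is an assumed convention.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HRDoc:
    doc_id: str
    text: str
    locations: frozenset[str]  # offices this document applies to; "ALL" marks company-wide docs


def applicable_docs(docs: list[HRDoc], office: str) -> list[HRDoc]:
    """Drop documents that do not apply to the employee's office before retrieval runs."""
    return [d for d in docs if "ALL" in d.locations or office in d.locations]


docs = [
    HRDoc("handbook", "Company values, code of conduct, and IT contacts.", frozenset({"ALL"})),
    HRDoc("leave-ny", "Time-off rules for the New York office.", frozenset({"New York"})),
    HRDoc("leave-tokyo", "Time-off rules for the Tokyo office.", frozenset({"Tokyo"})),
]
print([d.doc_id for d in applicable_docs(docs, "Tokyo")])  # ['handbook', 'leave-tokyo']
```

Similarity search then runs only over the filtered list, so location-specific rules can't leak into answers for the wrong office.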

RAG Use Case 10: IT Support and Troubleshooting Bots

In many companies, IT support still relies on flowcharts and long back-and-forth ticket exchanges with employees. Users often struggle to describe their issues, and standard scripts fail when the problem falls outside common scenarios. This slows down resolution and leaves IT teams dealing with the same questions again and again.

RAG offers a new approach. A RAG-based assistant draws on internal documentation, ticket history, and system logs to give support agents context. Instead of repeating generic advice, it delivers answers that match the actual problem, even if the user phrases the question in an unusual way.

Another advantage is pattern recognition. By analyzing past cases, the system can suggest fixes before issues escalate. It can also connect to monitoring tools, interpret system alerts, and recommend solutions that have worked before in similar situations. With this setup, IT teams reduce repetitive troubleshooting and shift their focus to preventing problems instead of constantly reacting to them.
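Matching a new issue against past tickets is essentially retrieval over ticket history. The sketch below uses Jaccard similarity on word sets (a simple stand-in for embeddings) and hypothetical ticket data. It returns only resolutions from tickets marked as resolved, and the 0.15 similarity floor is an arbitrary assumption.

```python
import re
from dataclasses import dataclass


@dataclass
class Ticket:
    summary: str
    resolution: str
    resolved: bool


def jaccard(a: str, b: str) -> float:
    """Word-set overlap divided by word-set union."""
    sa = set(re.findall(r"[a-z0-9]+", a.lower()))
    sb = set(re.findall(r"[a-z0-9]+", b.lower()))
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def suggest_fixes(issue: str, history: list[Ticket], k: int = 2, min_sim: float = 0.15) -> list[str]:
    """Return resolutions from the most similar past tickets that were actually resolved."""
    scored = sorted(
        ((jaccard(issue, t.summary), t) for t in history if t.resolved),
        key=lambda pair: pair[0],
        reverse=True,
    )
    return [t.resolution for sim, t in scored[:k] if sim >= min_sim]


history = [
    Ticket("VPN disconnects every few minutes on laptop", "Update the VPN client to the latest version", True),
    Ticket("VPN disconnects when switching Wi-Fi networks", "Disable Wi-Fi power saving in adapter settings", True),
    Ticket("Printer on floor 3 not responding", "Restart the print spooler", True),
    Ticket("VPN disconnects every hour", "Unknown", False),
]
fixes = suggest_fixes("VPN disconnects on my laptop", history)
print(fixes)
```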

How Uptech Can Help You with RAG Implementation

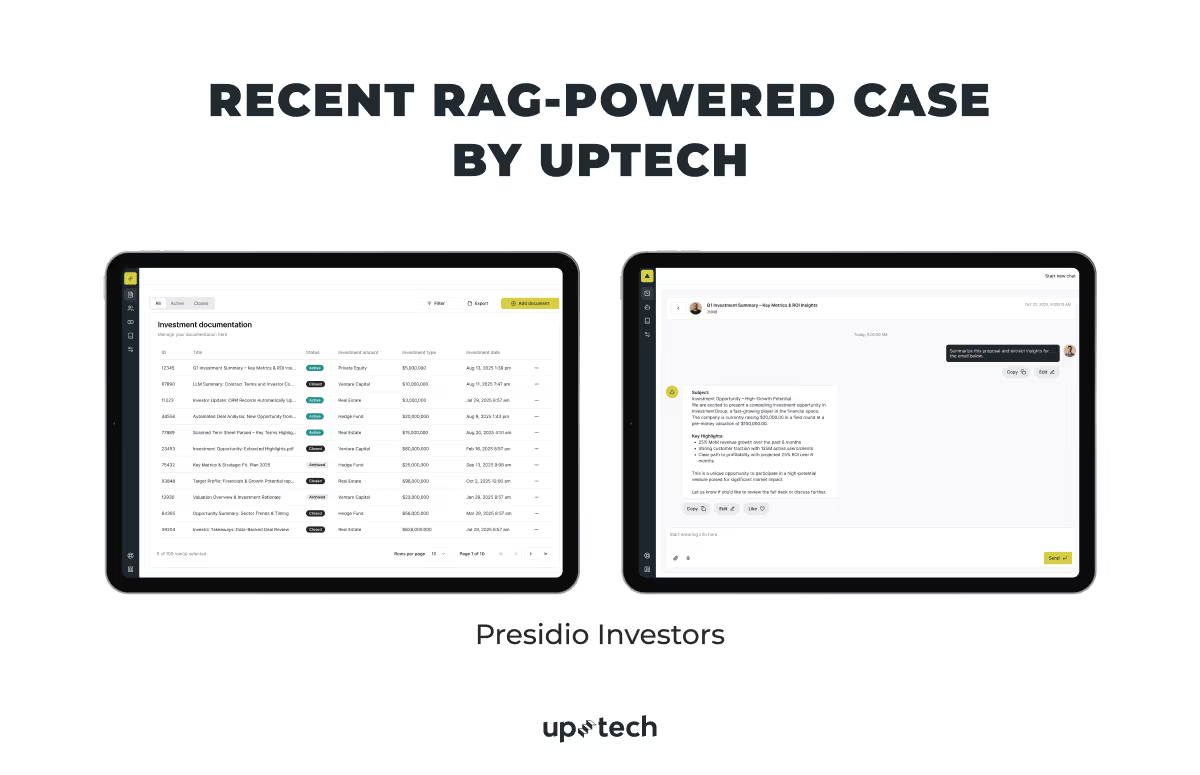

At Uptech, we have firsthand experience with AI projects across different sectors, from regulated fields such as healthcare and fintech to e-commerce and real estate. Each product we build comes with its own challenges: budget, technical limits, compliance requirements, and business goals. Despite these challenges, our focus on creating solutions that combine accuracy, security, and flexibility never changes. RAG is a natural extension of this expertise.

One of the examples where we implemented RAG is our work with Presidio Investors, a US-based fintech company that needed to automate the processing of investment data. We designed a secure system that uses AI to extract key details from emails and attachments, transform them into structured data, and update the CRM automatically. The result was an 80% reduction in manual work and the ability to process up to 100 investment deals each day.

We believe RAG is more than just one of the AI trends. It represents the future of how businesses will access and use knowledge: reliable, context-aware, and secure. Whether you want to implement RAG in customer support, healthcare, compliance, or financial systems, our team has the experience to turn it into a working solution that meets both technical and business needs.
